Welcome to H2O 3.0

- New Users

- Experienced Users

- Enterprise Users

- Sparkling Water Users

- Python Users

- R Users

- API Users

- Java Users

- Developers

Welcome to the H2O documentation site! Depending on your area of interest, select a learning path from the links above.

We’re glad you’re interested in learning more about H2O - if you have any questions or need general support, please email them to our Google Group, h2ostream or post them on our Google groups forum, h2ostream. This is a public forum, so your question will be visible to other users.

Note: To join our Google group on h2ostream, you need a Google account (such as Gmail or Google+). On the h2ostream page, click the Join group button, then click the New Topic button to post a new message. You don’t need to request or leave a message to join - you should be added to the group automatically.

We welcome your feedback! Please let us know if you have any questions or comments about H2O by clicking the chat balloon button in the lower-right corner in Flow (H2O’s web UI).

Type your question in the entry field that appears at the bottom of the sidebar and you will be connected with an H2O expert who will respond to your query in real time.

New Users

If you’re just getting started with H2O, here are some links to help you learn more:

Recommended Systems: This one-page PDF provides a basic overview of the operating systems, languages and APIs, Hadoop resource manager versions, cloud computing environments, browsers, and other resources recommended to run H2O. At a minimum, we recommend the following for compatibility with H2O:

- Operating Systems: Windows 7 or later; OS X 10.9 or later, Ubuntu 12.04, or RHEL/CentOS 6 or later

- Languages: Java 7 or later; Scala v 2.10 or later; R v.3 or later; Python 2.7.x or later (Scala, R, and Python are not required to use H2O unless you want to use H2O in those environments, but Java is always required)

- Browsers: Latest version of Chrome, Firefox, Safari, or Internet Explorer (An internet browser is required to use H2O’s web UI, Flow)

- Hadoop: Cloudera CDH 5.2 or later (5.3 is recommended); MapR v.3.1.1 or later; Hortonworks HDP 2.1 or later (Hadoop is not required to run H2O unless you want to deploy H2O on a Hadoop cluster)

- Spark: v 1.3 or later (Spark is only required if you want to run Sparkling Water)

Downloads page: First things first - download a copy of H2O here by selecting a build under “Download H2O” (the “Bleeding Edge” build contains the latest changes, while the latest alpha release is a more stable build), then use the installation instruction tabs to install H2O on your client of choice (standalone, R, Python, Hadoop, or Maven) .

For first-time users, we recommend downloading the latest alpha release and the default standalone option (the first tab) as the installation method. Make sure to install Java if it is not already installed.

Tutorials: To see a step-by-step example of our algorithms in action, select a model type from the following list:

Getting Started with Flow: This document describes our new intuitive web interface, Flow. This interface is similar to IPython notebooks, and allows you to create a visual workflow to share with others.

Launch from the command line: This document describes some of the additional options that you can configure when launching H2O (for example, to specify a different directory for saved Flow data, allocate more memory, or use a flatfile for quick configuration of a cluster).

Algorithms: This document describes the science behind our algorithms and provides a detailed, per-algo view of each model type.

Experienced Users

If you’ve used previous versions of H2O, the following links will help guide you through the process of upgrading to H2O 3.0.

Recommended Systems: This one-page PDF provides a basic overview of the operating systems, languages and APIs, Hadoop resource manager versions, cloud computing environments, browsers, and other resources recommended to run H2O.

Migration Guide: This document provides a comprehensive guide to assist users in upgrading to H2O 3.0. It gives an overview of the changes to the algorithms and the web UI introduced in this version and describes the benefits of upgrading for users of R, APIs, and Java.

Porting R Scripts: This document is designed to assist users who have created R scripts using previous versions of H2O. Due to the many improvements in R, scripts created using previous versions of H2O need some revision to work with H2O 3.0. This document provides a side-by-side comparison of the changes in R for each algorithm, as well as overall structural enhancements R users should be aware of, and provides a link to a tool that assists users in upgrading their scripts.

Recent Changes: This document describes the most recent changes in the latest build of H2O. It lists new features, enhancements (including changed parameter default values), and bug fixes for each release, organized by sub-categories such as Python, R, and Web UI.

H2O Classic vs H2O 3.0: This document presents a side-by-side comparison of H2O 3.0 and the previous version of H2O. It compares and contrasts the features, capabilities, and supported algorithms between the versions. If you’d like to learn more about the benefits of upgrading, this is a great source of information.

Algorithms Roadmap: This document outlines our currently implemented features and describes which features are planned for future software versions. If you’d like to know what’s up next for H2O, this is the place to go.

Contributing code: If you’re interested in contributing code to H2O, we appreciate your assistance! This document describes how to access our list of Jiras that are suggested tasks for contributors and how to contact us.

Enterprise Users

If you’re considering using H2O in an enterprise environment, you’ll be happy to know that the H2O platform is supported on all major Hadoop distributions including Cloudera Enterprise, Hortonworks Data Platform and the MapR Apache Hadoop Distribution.

H2O can be deployed in-memory directly on top of existing Hadoop clusters without the need for data transfers, allowing for unmatched speed and ease of use. To ensure the integrity of data stored in Hadoop clusters, the H2O platform supports native integration of the Kerberos protocol.

For additional sales or marketing assistance, please email sales@h2o.ai.

Recommended Systems: This one-page PDF provides a basic overview of the operating systems, languages and APIs, Hadoop resource manager versions, cloud computing environments, browsers, and other resources recommended to run H2O.

H2O Enterprise Edition: This web page describes the benefits of H2O Enterprise Edition.

Security: This document describes how to use the security features (available only in H2O Enterprise Edition).

- How to Pass S3 Credentials to H2O: This document describes the necessary step of passing your S3 credentials to H2O so that H2O can be used with AWS, as well as how to run H2O on an EC2 cluster.

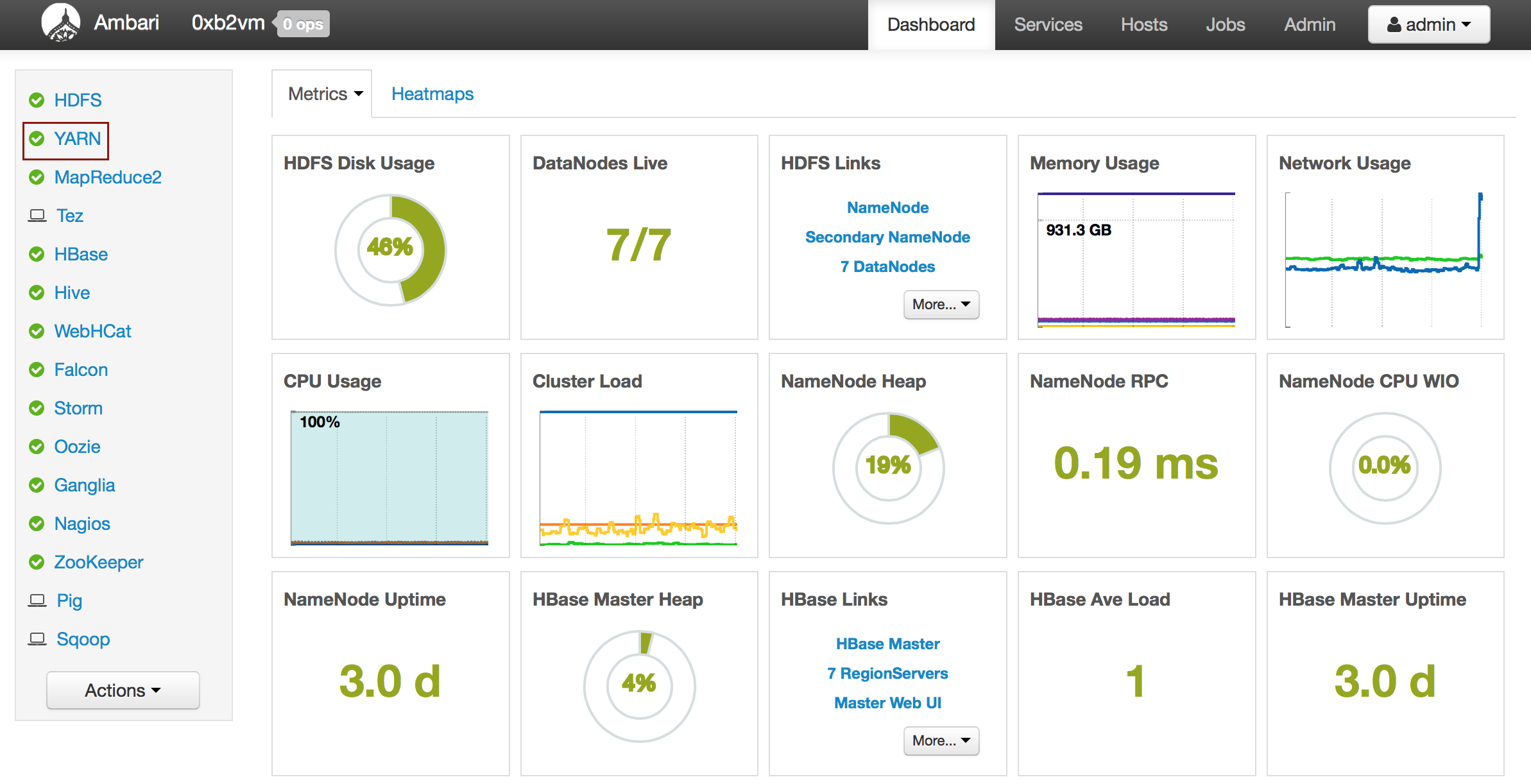

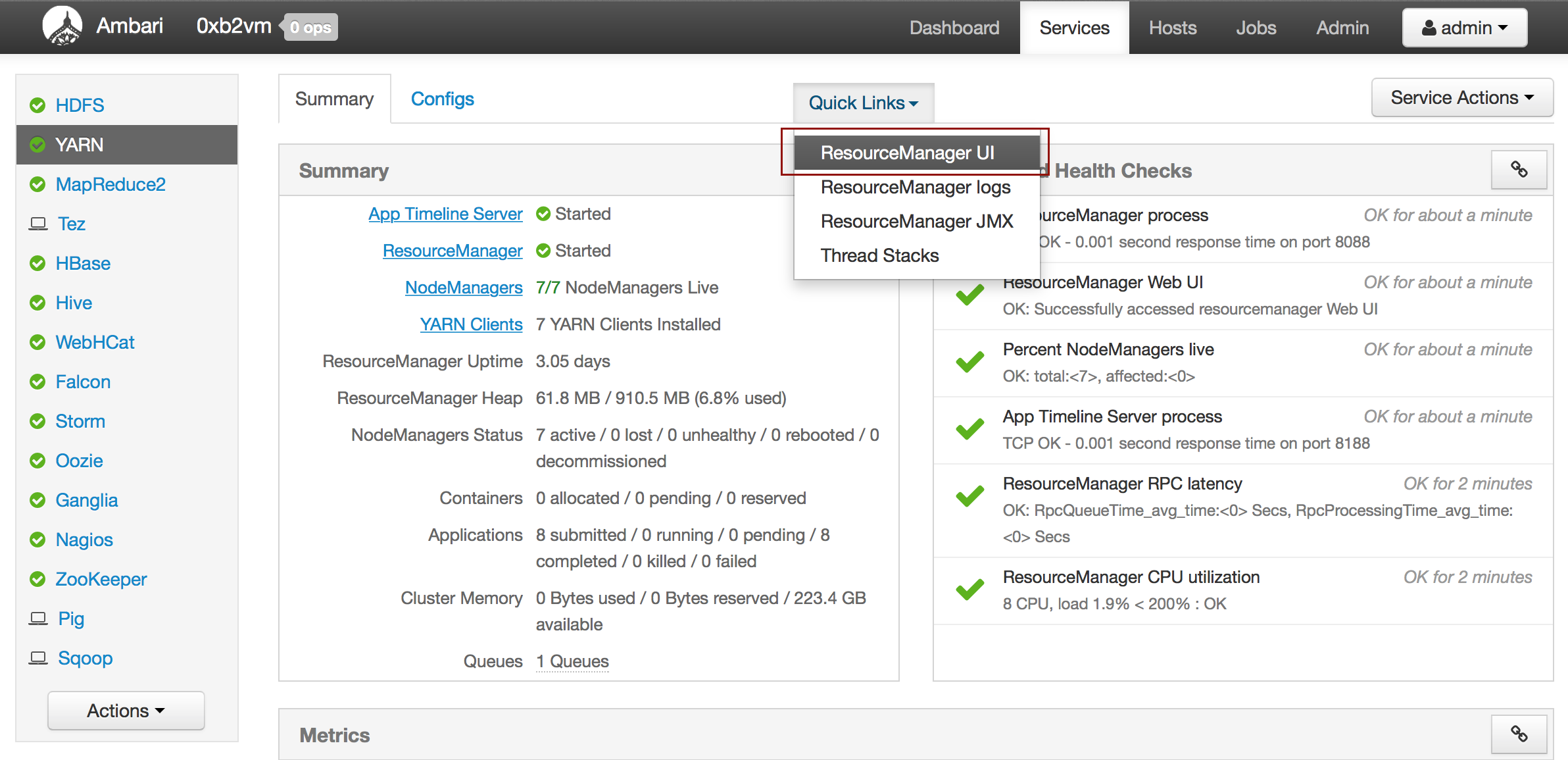

Click here to view instructions on how to set up H2O using Hadoop.

Running H2O on Hadoop: This document describes how to run H2O on Hadoop.

Sparkling Water Users

- Getting Started with Sparkling Water

- Sparkling Water Blog Posts

- Sparkling Water Meetup Slide Decks

- PySparkling

Sparkling Water is a gradle project with the following submodules:

- Core: Implementation of H2OContext, H2ORDD, and all technical integration code

- Examples: Application, demos, examples

- ML: Implementation of MLLib pipelines for H2O algorithms

- Assembly: Creates “fatJar” composed of all other modules

- py: Implementation of (h2o) Python binding to Sparkling Water

The best way to get started is to modify the core module or create a new module, which extends a project.

Users of our Spark-compatible solution, Sparkling Water, should be aware that Sparkling Water is only supported with the latest version of H2O. For more information about Sparkling Water, refer to the following links.

Sparkling Water is versioned according to the Spark versioning, so make sure to use the Sparkling Water version that corresponds to the installed version of Spark:

- Use Sparkling Water 1.2 for Spark 1.2

- Use Sparkling Water 1.3 for Spark 1.3+

- Use Sparkling Water 1.4 for Spark 1.4

- Use Sparkling Water 1.5 for Spark 1.5

Getting Started with Sparkling Water

Download Sparkling Water: Go here to download Sparkling Water.

Sparkling Water Development Documentation: Read this document first to get started with Sparkling Water.

Launch on Hadoop and Import from HDFS: Go here to learn how to start Sparkling Water on Hadoop.

Sparkling Water Tutorials: Go here for demos and examples.

Sparkling Water K-means Tutorial: Go here to view a demo that uses Scala to create a K-means model.

Sparkling Water GBM Tutorial: Go here to view a demo that uses Scala to create a GBM model.

Sparkling Water on YARN: Follow these instructions to run Sparkling Water on a YARN cluster.

Building Applications on top of H2O: This short tutorial describes project building and demonstrates the capabilities of Sparkling Water using Spark Shell to build a Deep Learning model.

Sparkling Water FAQ: This FAQ provides answers to many common questions about Sparkling Water.

Connecting RStudio to Sparkling Water: This illustrated tutorial describes how to use RStudio to connect to Sparkling Water.

Sparkling Water Blog Posts

Sparkling Water Meetup Slide Decks

PySparkling

Note: PySparkling requires Sparkling Water 1.5 or later.

H2O’s PySparkling package is not available through pip (there is another similarly-named package). H2O’s PySparkling package requires EasyInstall.

To install H2O’s PySparkling package, use the egg file included in the distribution.

- Download Spark 1.5.1.

- Set the

SPARK_HOMEandMASTERvariables as described on the Downloads page. - Download Sparkling Water 1.5

- In the unpacked Sparkling Water directory, run the following command:

easy_install --upgrade sparkling-water-1.5.6/py/dist/pySparkling-1.5.6-py2.7.egg

Python Users

Pythonistas will be glad to know that H2O now provides support for this popular programming language. Python users can also use H2O with IPython notebooks. For more information, refer to the following links.

Click here to view instructions on how to use H2O with Python.

Python readme: This document describes how to setup and install the prerequisites for using Python with H2O.

Python docs: This document represents the definitive guide to using Python with H2O.

Python Parity: This document is is a list of Python capabilities that were previously available only through the H2O R interface but are now available in H2O using the Python interface.

Grid Search in Python: This notebook demonstrates the use of grid search in Python.

R Users

Don’t worry, R users - we still provide R support in the latest version of H2O, just as before. The R components of H2O have been cleaned up, simplified, and standardized, so the command format is easier and more intuitive. Due to these improvements, be aware that any scripts created with previous versions of H2O will need some revision to be compatible with the latest version.

We have provided the following helpful resources to assist R users in upgrading to the latest version, including a document that outlines the differences between versions and a tool that reviews scripts for deprecated or renamed parameters.

Currently, the only version of R that is known to be incompatible with H2O is R version 3.1.0 (codename “Spring Dance”). If you are using that version, we recommend upgrading the R version before using H2O.

To check which version of H2O is installed in R, use versions::installed.versions("h2o").

Click here to view instructions for using H2O with R.

R User Documentation: This document contains all commands in the H2O package for R, including examples and arguments. It represents the definitive guide to using H2O in R.

Porting R Scripts: This document is designed to assist users who have created R scripts using previous versions of H2O. Due to the many improvements in R, scripts created using previous versions of H2O will not work. This document provides a side-by-side comparison of the changes in R for each algorithm, as well as overall structural enhancements R users should be aware of, and provides a link to a tool that assists users in upgrading their scripts.

Connecting RStudio to Sparkling Water: This illustrated tutorial describes how to use RStudio to connect to Sparkling Water.

Ensembles

Ensemble machine learning methods use multiple learning algorithms to obtain better predictive performance.

H2O Ensemble GitHub repository: Location for the H2O Ensemble R package.

Ensemble Documentation: This documentation provides more details on the concepts behind ensembles and how to use them.

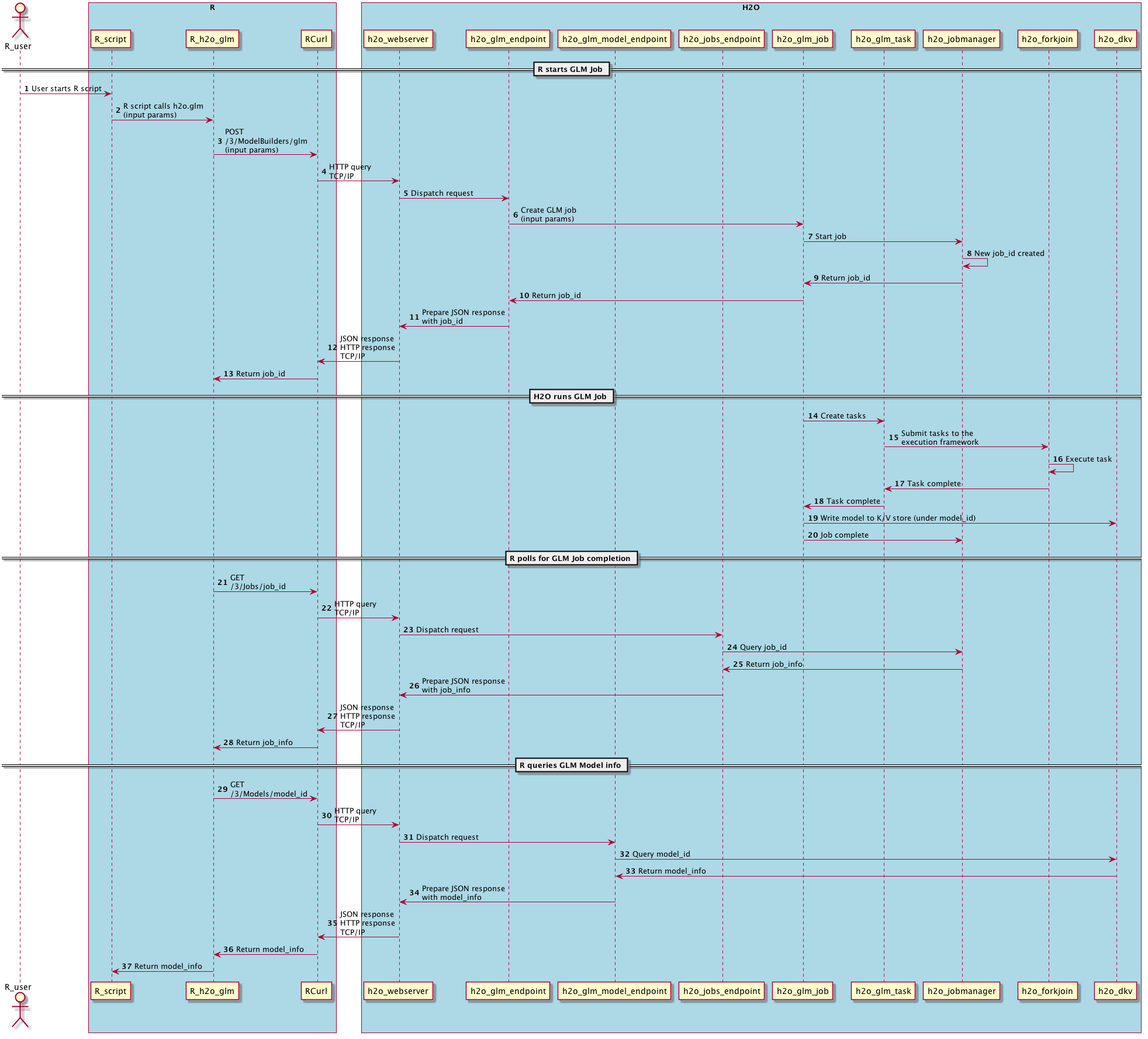

API Users

API users will be happy to know that the APIs have been more thoroughly documented in the latest release of H2O and additional capabilities (such as exporting weights and biases for Deep Learning models) have been added.

REST APIs are generated immediately out of the code, allowing users to implement machine learning in many ways. For example, REST APIs could be used to call a model created by sensor data and to set up auto-alerts if the sensor data falls below a specified threshold.

H2O 3 REST API Overview: This document describes how the REST API commands are used in H2O, versioning, experimental APIs, verbs, status codes, formats, schemas, payloads, metadata, and examples.

REST API Reference: This document represents the definitive guide to the H2O REST API.

REST API Schema Reference: This document represents the definitive guide to the H2O REST API schemas.

H2O 3 REST API Overview: This document provides an overview of how APIs are used in H2O, including versioning, URLs, HTTP verbs, status codes, formats, schemas, and examples.

Java Users

For Java developers, the following resources will help you create your own custom app that uses H2O.

H2O Core Java Developer Documentation: The definitive Java API guide for the core components of H2O.

H2O Algos Java Developer Documentation: The definitive Java API guide for the algorithms used by H2O.

h2o-genmodel (POJO) Javadoc: Provides a step-by-step guide to creating and implementing POJOs in a Java application.

SDK Information

The Java API is generated and accessible from the download page.

Developers

If you’re looking to use H2O to help you develop your own apps, the following links will provide helpful references.

For the latest version of IDEA IntelliJ, run ./gradlew idea, then click File > Open within IDEA. Select the .ipr file in the repository and click the Choose button.

For older versions of IDEA IntelliJ, run ./gradlew idea, then Import Project within IDEA and point it to the h2o-3 directory.

Note: This process will take longer, so we recommend using the first method if possible.

For JUnit tests to pass, you may need multiple H2O nodes. Create a “Run/Debug” configuration with the following parameters:

Type: Application

Main class: H2OApp

Use class path of module: h2o-app

After starting multiple “worker” node processes in addition to the JUnit test process, they will cloud up and run the multi-node JUnit tests.

Recommended Systems: This one-page PDF provides a basic overview of the operating systems, languages and APIs, Hadoop resource manager versions, cloud computing environments, browsers, and other resources recommended to run H2O.

Developer Documentation: Detailed instructions on how to build and launch H2O, including how to clone the repository, how to pull from the repository, and how to install required dependencies.

Click here to view instructions on how to use H2O with Maven.

Maven install: This page provides information on how to build a version of H2O that generates the correct IDE files.

apps.h2o.ai: Apps.h2o.ai is designed to support application developers via events, networking opportunities, and a new, dedicated website comprising developer kits and technical specs, news, and product spotlights.

H2O Project Templates: This page provides template info for projects created in Java, Scala, or Sparkling Water.

H2O Scala API Developer Documentation: The definitive Scala API guide for H2O.

Hacking Algos: This blog post by Cliff walks you through building a new algorithm, using K-Means, Quantiles, and Grep as examples.

KV Store Guide: Learn more about performance characteristics when implementing new algorithms.

- Contributing code: If you’re interested in contributing code to H2O, we appreciate your assistance! This document describes how to access our list of Jiras that contributors can work on and how to contact us. Note: To access this link, you must have an Atlassian account.

Downloading H2O

To download H2O, go to our downloads page. Select a build type (bleeding edge or latest alpha), then select an installation method (standalone, R, Python, Hadoop, or Maven) by clicking the tabs at the top of the page. Follow the instructions in the tab to install H2O.

Starting H2O …

There are a variety of ways to start H2O, depending on which client you would like to use.

… From R

To use H2O in R, follow the instructions on the download page.

… From Python

To use H2O in Python, follow the instructions on the download page.

… On Spark

To use H2O on Spark, follow the instructions on the Sparkling Water download page.

… From the Cmd Line

You can use Terminal (OS X) or the Command Prompt (Windows) to launch H2O 3.0. When you launch from the command line, you can include additional instructions to H2O 3.0, such as how many nodes to launch, how much memory to allocate for each node, assign names to the nodes in the cloud, and more.

Note: H2O requires some space in the

/tmpdirectory to launch. If you cannot launch H2O, try freeing up some space in the/tmpdirectory, then try launching H2O again.

For more detailed instructions on how to build and launch H2O, including how to clone the repository, how to pull from the repository, and how to install required dependencies, refer to the developer documentation.

There are two different argument types:

- JVM arguments

- H2O arguments

The arguments use the following format: java <JVM Options> -jar h2o.jar <H2O Options>.

JVM Options

-version: Display Java version info.-Xmx<Heap Size>: To set the total heap size for an H2O node, configure the memory allocation option-Xmx. By default, this option is set to 1 Gb (-Xmx1g). When launching nodes, we recommend allocating a total of four times the memory of your data.

Note: Do not try to launch H2O with more memory than you have available.

H2O Options

-hor-help: Display this information in the command line output.-name <H2OCloudName>: Assign a name to the H2O instance in the cloud (where<H2OCloudName>is the name of the cloud. Nodes with the same cloud name will form an H2O cloud (also known as an H2O cluster).-flatfile <FileName>: Specify a flatfile of IP address for faster cloud formation (where<FileName>is the name of the flatfile.-ip <IPnodeAddress>: Specify an IP address other than the defaultlocalhostfor the node to use (where<IPnodeAddress>is the IP address).-port <#>: Specify a port number other than the default54321for the node to use (where<#>is the port number).-network ###.##.##.#/##: Specify an IP addresses (where###.##.##.#/##represents the IP address and subnet mask). The IP address discovery code binds to the first interface that matches one of the networks in the comma-separated list; to specify an IP address, use-network. To specify a range, use a comma to separate the IP addresses:-network 123.45.67.0/22,123.45.68.0/24. For example,10.1.2.0/24supports 256 possibilities.-ice_root <fileSystemPath>: Specify a directory for H2O to spill temporary data to disk (where<fileSystemPath>is the file path).-flow_dir <server-side or HDFS directory>: Specify a directory for saved flows. The default is/Users/h2o-<H2OUserName>/h2oflows(where<H2OUserName>is your user name).-nthreads <#ofThreads>: Specify the maximum number of threads in the low-priority batch work queue (where<#ofThreads>is the number of threads). The default is 99.-client: Launch H2O node in client mode. This is used mostly for running Sparkling Water.

Cloud Formation Behavior

New H2O nodes join to form a cloud during launch. After a job has started on the cloud, it prevents new members from joining.

To start an H2O node with 4GB of memory and a default cloud name:

java -Xmx4g -jar h2o.jarTo start an H2O node with 6GB of memory and a specific cloud name:

java -Xmx6g -jar h2o.jar -name MyCloudTo start an H2O cloud with three 2GB nodes using the default cloud names:

java -Xmx2g -jar h2o.jar & java -Xmx2g -jar h2o.jar & java -Xmx2g -jar h2o.jar &

Wait for the INFO: Registered: # schemas in: #mS output before entering the above command again to add another node (the number for # will vary).

Flatfile Configuration for Multi-Node Clusters

Running H2O on a multi-node cluster allows you to use more memory for large-scale tasks (for example, creating models from huge datasets) than would be possible on a single node.

If you are configuring many nodes, using the -flatfile option is fast and easy. The -flatfile option is used to define a list of potential cloud peers. However, it is not an alternative to -ip and -port, which should be used to bind the IP and port address of the node you are using to launch H2O.

To configure H2O on a multi-node cluster:

- Locate a set of hosts that will be used to create your cluster. A host can be a server, an EC2 instance, or your laptop.

- Download the appropriate version of H2O for your environment.

- Verify the same h2o.jar file is available on each host in the multi-node cluster.

Create a flatfile.txt that contains an IP address and port number for each H2O instance. Use one entry per line. For example:

192.168.1.163:54321 192.168.1.164:54321- Copy the flatfile.txt to each node in the cluster.

Use the

-Xmxoption to specify the amount of memory for each node. The cluster’s memory capacity is the sum of all H2O nodes in the cluster.For example, if you create a cluster with four 20g nodes (by specifying

-Xmx20gfour times), H2O will have a total of 80 gigs of memory available.For best performance, we recommend sizing your cluster to be about four times the size of your data. To avoid swapping, the

-Xmxallocation must not exceed the physical memory on any node. Allocating the same amount of memory for all nodes is strongly recommended, as H2O works best with symmetric nodes.Note the optional

-ipand-portoptions specify the IP address and ports to use. The-ipoption is especially helpful for hosts with multiple network interfaces.java -Xmx20g -jar h2o.jar -flatfile flatfile.txt -port 54321The output will resemble the following:

04-20 16:14:00.253 192.168.1.70:54321 2754 main INFO: 1. Open a terminal and run 'ssh -L 55555:localhost:54321 H2O-3User@###.###.#.##' 04-20 16:14:00.253 192.168.1.70:54321 2754 main INFO: 2. Point your browser to http://localhost:55555 04-20 16:14:00.437 192.168.1.70:54321 2754 main INFO: Log dir: '/tmp/h2o-H2O-3User/h2ologs' 04-20 16:14:00.437 192.168.1.70:54321 2754 main INFO: Cur dir: '/Users/H2O-3User/h2o-3' 04-20 16:14:00.459 192.168.1.70:54321 2754 main INFO: HDFS subsystem successfully initialized 04-20 16:14:00.460 192.168.1.70:54321 2754 main INFO: S3 subsystem successfully initialized 04-20 16:14:00.460 192.168.1.70:54321 2754 main INFO: Flow dir: '/Users/H2O-3User/h2oflows' 04-20 16:14:00.475 192.168.1.70:54321 2754 main INFO: Cloud of size 1 formed [/192.168.1.70:54321]As you add more nodes to your cluster, the output is updated:

INFO WATER: Cloud of size 2 formed [/...]...Access the H2O 3.0 web UI (Flow) with your browser. Point your browser to the HTTP address specified in the output

Listening for HTTP and REST traffic on ....

… On EC2 and S3

Note: If you would like to try out H2O on an EC2 cluster, play.h2o.ai is the easiest way to get started. H2O Play provides access to a temporary cluster managed by H2O.

If you would still like to set up your own EC2 cluster, follow the instructions below.

On EC2

Tested on Redhat AMI, Amazon Linux AMI, and Ubuntu AMI

To use the Amazon Web Services (AWS) S3 storage solution, you will need to pass your S3 access credentials to H2O. This will allow you to access your data on S3 when importing data frames with path prefixes s3n://....

For security reasons, we recommend writing a script to read the access credentials that are stored in a separate file. This will not only keep your credentials from propagating to other locations, but it will also make it easier to change the credential information later.

Standalone Instance

When running H2O in standalone mode using the simple Java launch command, we can pass in the S3 credentials in two ways.

You can pass in credentials in standalone mode the same way as accessing data from HDFS on Hadoop. Create a

core-site.xmlfile and pass it in with the flag-hdfs_config. For an examplecore-site.xmlfile, refer to Core-site.xml.Edit the properties in the core-site.xml file to include your Access Key ID and Access Key as shown in the following example:

<property> <name>fs.s3n.awsAccessKeyId</name> <value>[AWS SECRET KEY]</value> </property> <property> <name>fs.s3n.awsSecretAccessKey</name> <value>[AWS SECRET ACCESS KEY]</value> </property>Launch with the configuration file

core-site.xmlby entering the following in the command line:java -jar h2o.jar -hdfs_config core-site.xml

Import the data using importFile with the S3 url path:

s3n://bucket/path/to/file.csv

You can pass the AWS Access Key and Secret Access Key in an S3N Url in Flow, R, or Python (where

AWS_ACCESS_KEYrepresents your user name andAWS_SECRET_KEYrepresents your password).To import the data from the Flow API:

`importFiles [ "s3n://<AWS_ACCESS_KEY>:<AWS_SECRET_KEY>@bucket/path/to/file.csv" ]`To import the data from the R API:

`h2o.importFile(path = "s3n://<AWS_ACCESS_KEY>:<AWS_SECRET_KEY>@bucket/path/to/file.csv")`To import the data from the Python API:

`h2o.import_frame(path = "s3n://<AWS_ACCESS_KEY>:<AWS_SECRET_KEY>@bucket/path/to/file.csv")`

Multi-Node Instance

Python and the

botoPython library are required to launch a multi-node instance of H2O on EC2. Confirm these dependencies are installed before proceeding.

For more information, refer to the H2O EC2 repo.

Build a cluster of EC2 instances by running the following commands on the host that can access the nodes using a public DNS name.

Edit

h2o-cluster-launch-instances.pyto include your SSH key name and security group name, as well as any other environment-specific variables../h2o-cluster-launch-instances.py ./h2o-cluster-distribute-h2o.sh—OR—

./h2o-cluster-launch-instances.py ./h2o-cluster-download-h2o.shNote: The second method may be faster than the first, since download pulls from S3.

Distribute the credentials using

./h2o-cluster-distribute-aws-credentials.sh.Note: If you are running H2O using an IAM role, it is not necessary to distribute the AWS credentials to all the nodes in the cluster. The latest version of H2O can access the temporary access key.

Caution: Distributing the AWS credentials copies the Amazon

AWS_ACCESS_KEY_IDandAWS_SECRET_ACCESS_KEYto the instances to enable S3 and S3N access. Use caution when adding your security keys to the cloud.Start H2O by launching one H2O node per EC2 instance:

./h2o-cluster-start-h2o.shWait 60 seconds after entering the command before entering it on the next node.

In your internet browser, substitute any of the public DNS node addresses for

IP_ADDRESSin the following example:http://IP_ADDRESS:54321- To start H2O:

./h2o-cluster-start-h2o.sh - To stop H2O:

./h2o-cluster-stop-h2o.sh - To shut down the cluster, use your Amazon AWS console to shut down the cluster manually.

Note: To successfully import data, the data must reside in the same location on all nodes.

- To start H2O:

Core-site.xml Example

The following is an example core-site.xml file:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!--

<property>

<name>fs.default.name</name>

<value>s3n://<your s3 bucket></value>

</property>

-->

<property>

<name>fs.s3n.awsAccessKeyId</name>

<value>insert access key here</value>

</property>

<property>

<name>fs.s3n.awsSecretAccessKey</name>

<value>insert secret key here</value>

</property>

</configuration>

Launching H2O

- Selecting the Operating System and Virtualization Type

- Configuring the Instance

- Downloading Java and H2O

Note: Before launching H2O on an EC2 cluster, verify that ports 54321 and 54322 are both accessible by TCP and UDP.

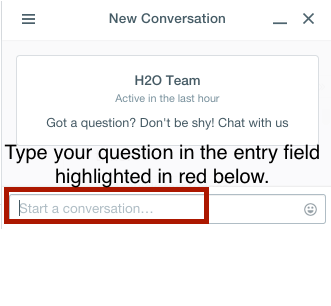

Selecting the Operating System and Virtualization Type

Select your operating system and the virtualization type of the prebuilt AMI on Amazon. If you are using Windows, you will need to use a hardware-assisted virtual machine (HVM). If you are using Linux, you can choose between para-virtualization (PV) and HVM. These selections determine the type of instances you can launch.

For more information about virtualization types, refer to Amazon.

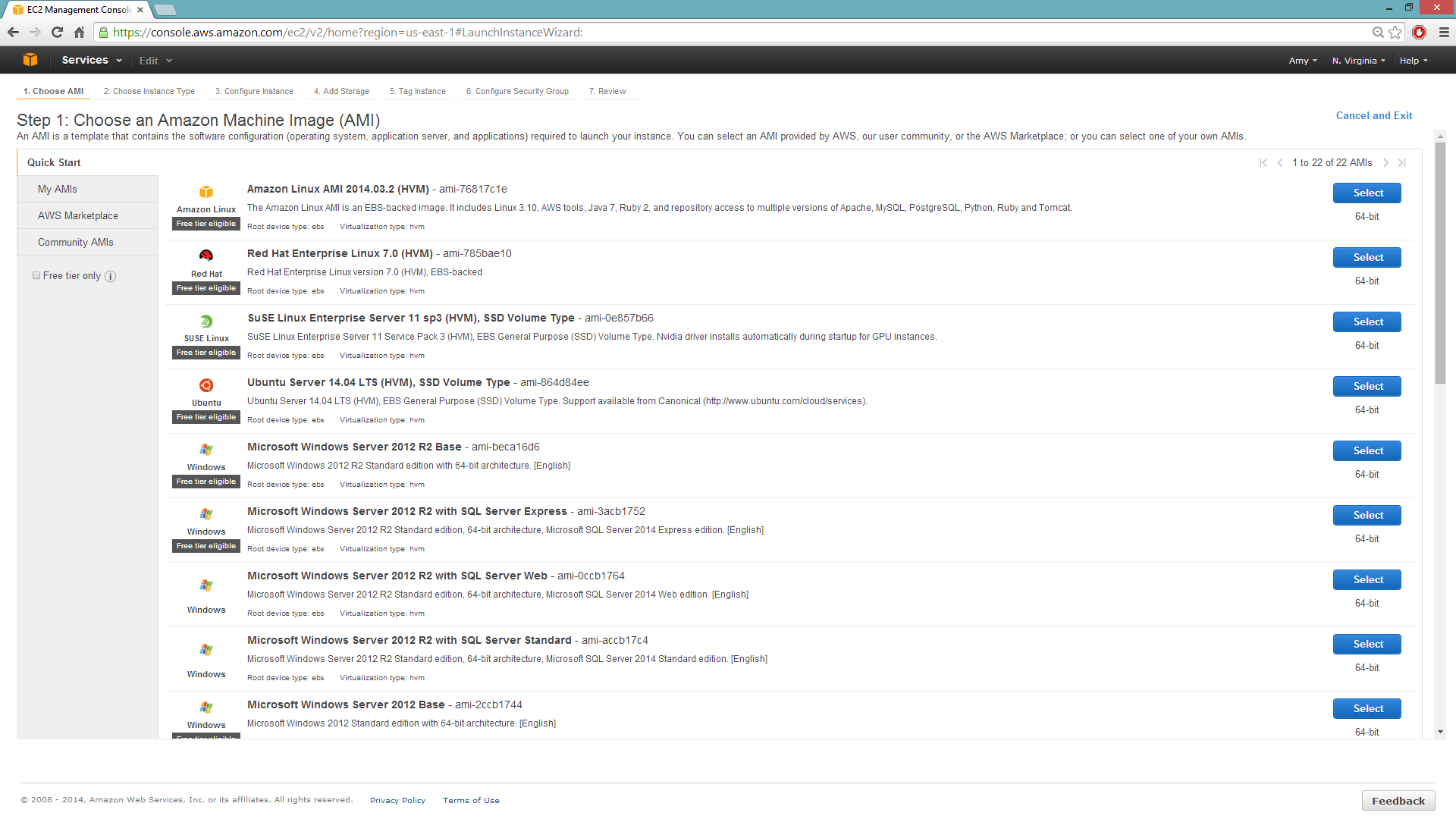

Configuring the Instance

Select the IAM role and policy to use to launch the instance. H2O detects the temporary access keys associated with the instance, so you don’t need to copy your AWS credentials to the instances.

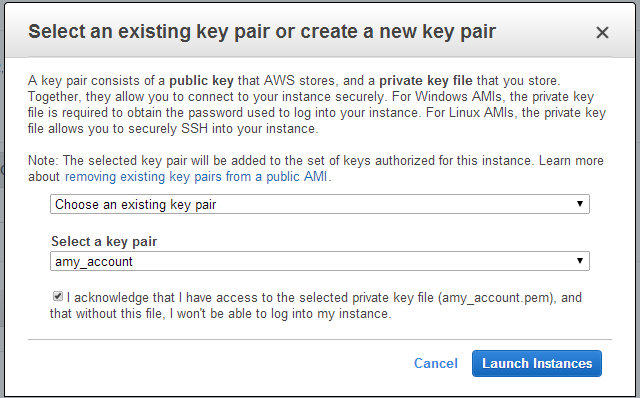

When launching the instance, select an accessible key pair.

(Windows Users) Tunneling into the Instance

For Windows users that do not have the ability to use ssh from the terminal, either download Cygwin or a Git Bash that has the capability to run ssh:

ssh -i amy_account.pem ec2-user@54.165.25.98

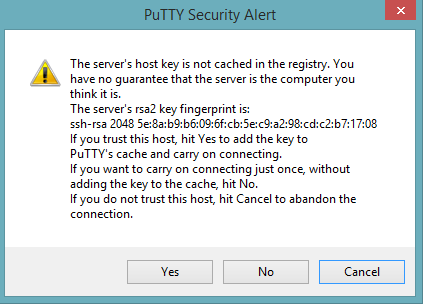

Otherwise, download PuTTY and follow these instructions:

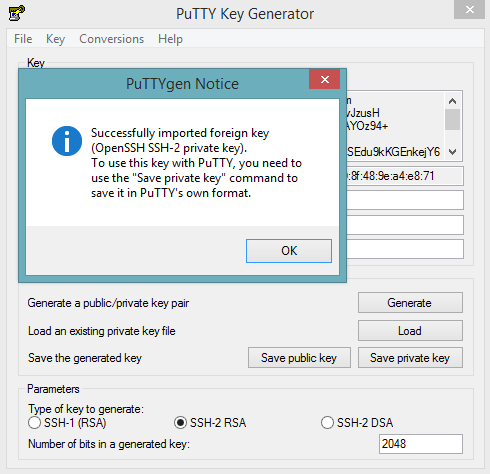

- Launch the PuTTY Key Generator.

- Load your downloaded AWS pem key file. Note: To see the file, change the browser file type to “All”.

Save the private key as a .ppk file.

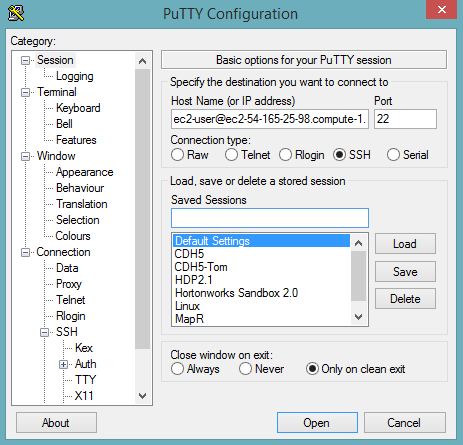

Launch the PuTTY client.

In the Session section, enter the host name or IP address. For Ubuntu users, the default host name is

ubuntu@<ip-address>. For Linux users, the default host name isec2-user@<ip-address>.

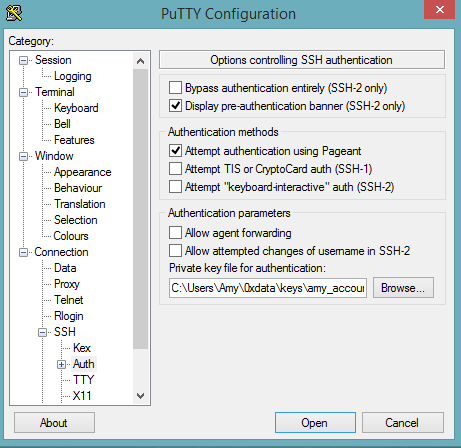

Select SSH, then Auth in the sidebar, and click the Browse button to select the private key file for authentication.

Start a new session and click the Yes button to confirm caching of the server’s rsa2 key fingerprint and continue connecting.

Downloading Java and H2O

- Download Java (JDK 1.7 or later) if it is not already available on the instance.

To download H2O, run the

wgetcommand with the link to the zip file available on our website by copying the link associated with the Download button for the selected H2O build.wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/index.html unzip h2o-3.7.0.3327.zip cd h2o-3.7.0.3327 java -Xmx4g -jar h2o.jarFrom your browser, navigate to

<Private_IP_Address>:54321or<Public_DNS>:54321to use H2O’s web interface.

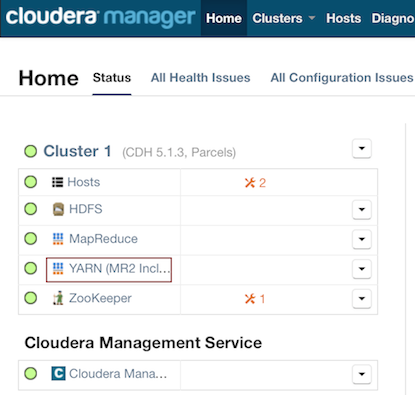

… On Hadoop

Currently supported versions:

- CDH 5.2

- CDH 5.3

- CDH 5.4.2

- HDP 2.1

- HDP 2.2

- HDP 2.3

- MapR 3.1.1

- MapR 4.0.1

- MapR 5.0

Important Points to Remember:

- The command used to launch H2O differs from previous versions (refer to the Tutorial section)

- Launching H2O on Hadoop requires at least 6 GB of memory

- Each H2O node runs as a mapper

- Run only one mapper per host

- There are no combiners or reducers

- Each H2O cluster must have a unique job name

-mapperXmx,-nodes, and-outputare required- Root permissions are not required - just unzip the H2O .zip file on any single node

Prerequisite: Open Communication Paths

H2O communicates using two communication paths. Verify these are open and available for use by H2O.

Path 1: mapper to driver

Optionally specify this port using the -driverport option in the hadoop jar command (see “Hadoop Launch Parameters” below). This port is opened on the driver host (the host where you entered the hadoop jar command). By default, this port is chosen randomly by the operating system.

Path 2: mapper to mapper

Optionally specify this port using the -baseport option in the hadoop jar command (refer to Hadoop Launch Parameters below. This port and the next subsequent port are opened on the mapper hosts (the Hadoop worker nodes) where the H2O mapper nodes are placed by the Resource Manager. By default, ports 54321 (TCP) and 54322 (TCP & UDP) are used.

The mapper port is adaptive: if 54321 and 54322 are not available, H2O will try 54323 and 54324 and so on. The mapper port is designed to be adaptive because sometimes if the YARN cluster is low on resources, YARN will place two H2O mappers for the same H2O cluster request on the same physical host. For this reason, we recommend opening a range of more than two ports (20 ports should be sufficient).

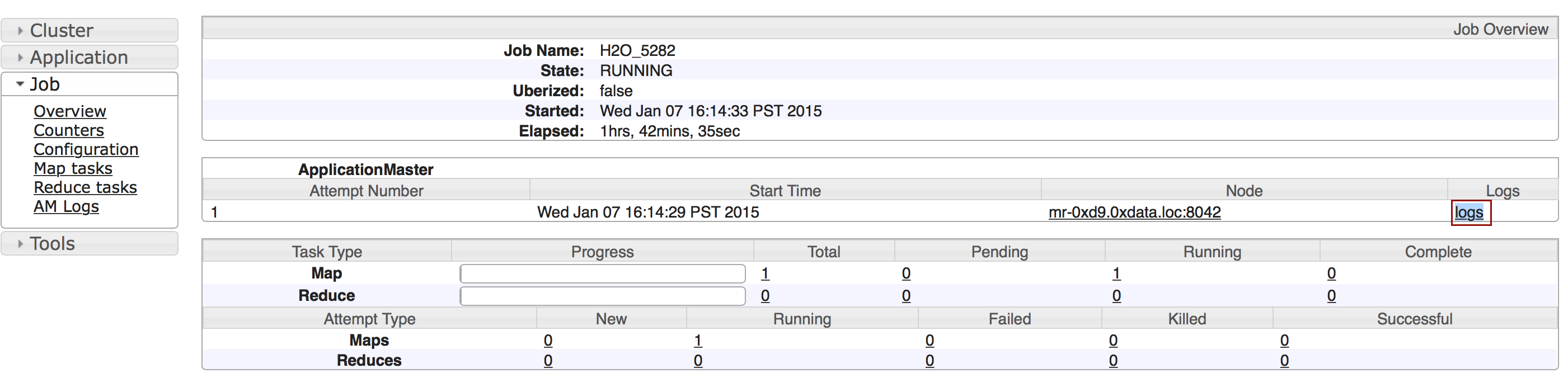

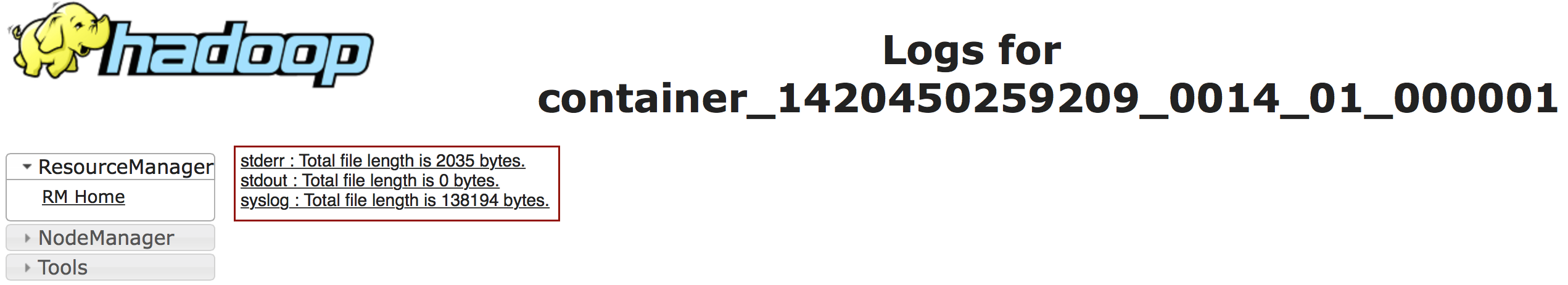

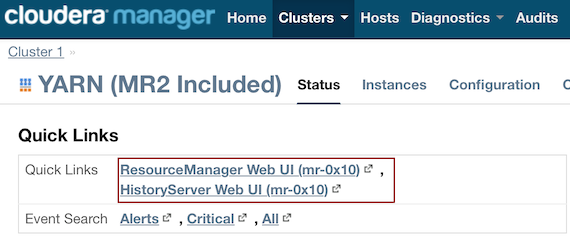

Tutorial

The following tutorial will walk the user through the download or build of H2O and the parameters involved in launching H2O from the command line.

Download the latest H2O release for your version of Hadoop:

wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/h2o-3.7.0.3327-cdh5.2.zip wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/h2o-3.7.0.3327-cdh5.3.zip wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/h2o-3.7.0.3327-hdp2.1.zip wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/h2o-3.7.0.3327-hdp2.2.zip wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/h2o-3.7.0.3327-hdp2.3.zip wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/h2o-3.7.0.3327-mapr3.1.1.zip wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/h2o-3.7.0.3327-mapr4.0.1.zip wget http://h2o-release.s3.amazonaws.com/h2o/master/3327/h2o-3.7.0.3327-mapr5.0.zipNote: Enter only one of the above commands.

Prepare the job input on the Hadoop Node by unzipping the build file and changing to the directory with the Hadoop and H2O’s driver jar files.

unzip h2o-3.7.0.3327-*.zip cd h2o-3.7.0.3327-*To launch H2O nodes and form a cluster on the Hadoop cluster, run:

hadoop jar h2odriver.jar -nodes 1 -mapperXmx 6g -output hdfsOutputDirNameThe above command launches a 6g node of H2O. We recommend you launch the cluster with at least four times the memory of your data file size.

mapperXmx is the mapper size or the amount of memory allocated to each node. Specify at least 6 GB.

nodes is the number of nodes requested to form the cluster.

output is the name of the directory created each time a H2O cloud is created so it is necessary for the name to be unique each time it is launched.

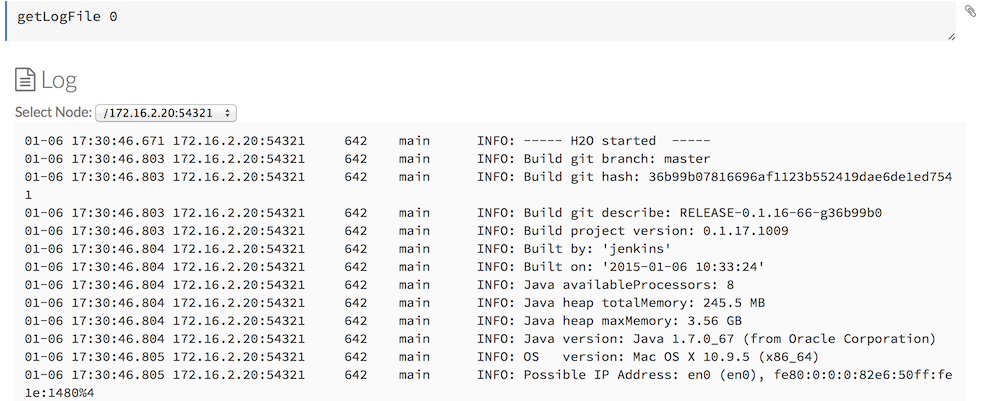

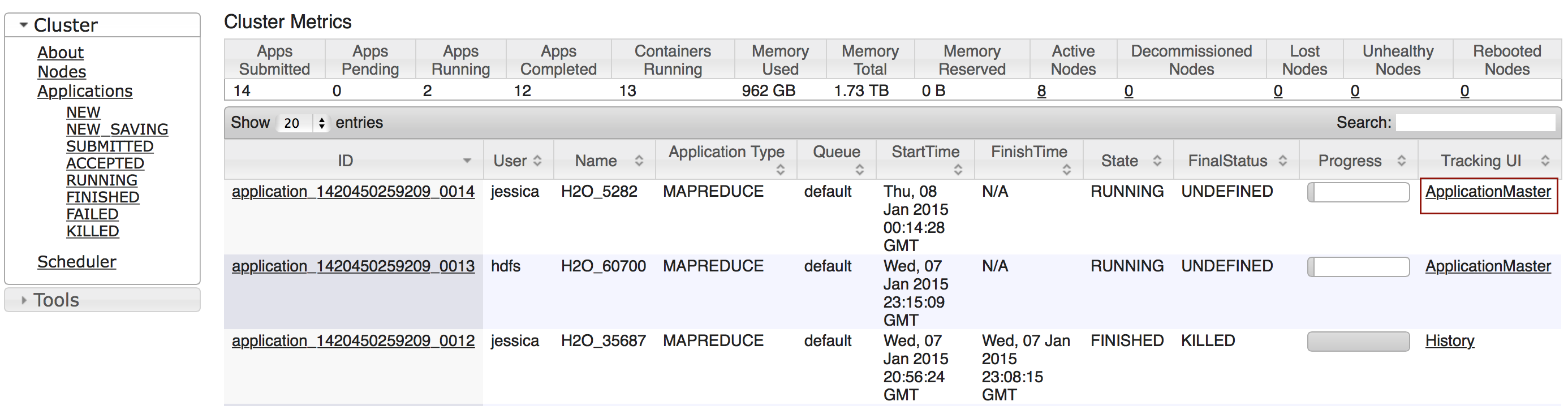

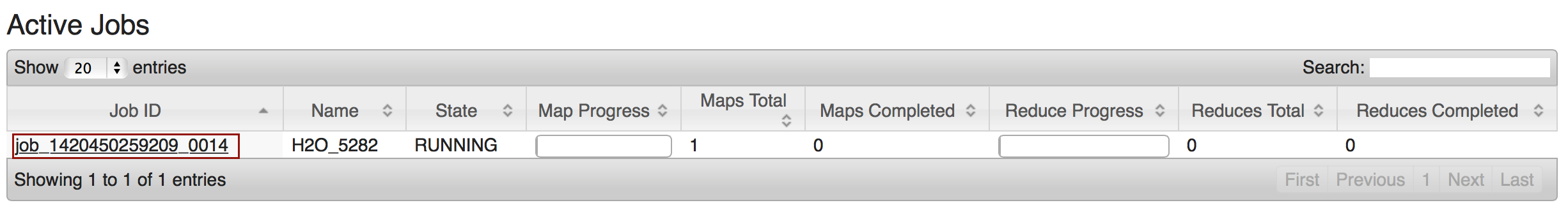

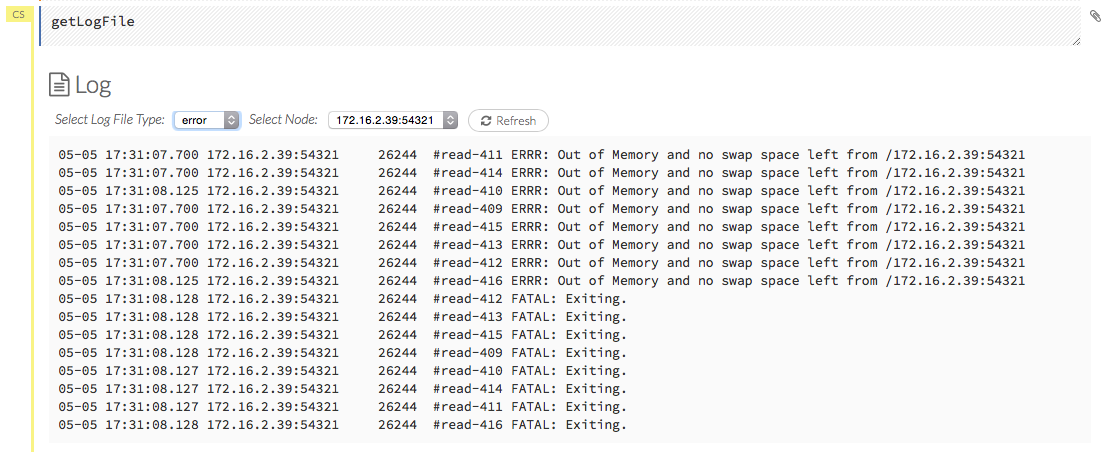

To monitor your job, direct your web browser to your standard job tracker Web UI. To access H2O’s Web UI, direct your web browser to one of the launched instances. If you are unsure where your JVM is launched, review the output from your command after the nodes has clouded up and formed a cluster. Any of the nodes’ IP addresses will work as there is no master node.

Determining driver host interface for mapper->driver callback... [Possible callback IP address: 172.16.2.181] [Possible callback IP address: 127.0.0.1] ... Waiting for H2O cluster to come up... H2O node 172.16.2.184:54321 requested flatfile Sending flatfiles to nodes... [Sending flatfile to node 172.16.2.184:54321] H2O node 172.16.2.184:54321 reports H2O cluster size 1 H2O cluster (1 nodes) is up Blocking until the H2O cluster shuts down...

Hadoop Launch Parameters

-h | -help: Display help-jobname <JobName>: Specify a job name for the Jobtracker to use; the default isH2O_nnnnn(where n is chosen randomly)-driverif <IP address of mapper -> driver callback interface>: Specify the IP address for callback messages from the mapper to the driver.-driverport <port of mapper -> callback interface>: Specify the port number for callback messages from the mapper to the driver.-network <IPv4Network1>[,<IPv4Network2>]: Specify the IPv4 network(s) to bind to the H2O nodes; multiple networks can be specified to force H2O to use the specified host in the Hadoop cluster.10.1.2.0/24allows 256 possibilities.-timeout <seconds>: Specify the timeout duration (in seconds) to wait for the cluster to form before failing. Note: The default value is 120 seconds; if your cluster is very busy, this may not provide enough time for the nodes to launch. If H2O does not launch, try increasing this value (for example,-timeout 600).-disown: Exit the driver after the cluster forms.-notify <notification file name>: Specify a file to write when the cluster is up. The file contains the IP and port of the embedded web server for one of the nodes in the cluster. All mappers must start before the H2O cloud is considered “up”.-mapperXmx <per mapper Java Xmx heap size>: Specify the amount of memory to allocate to H2O (at least 6g).-extramempercent <0-20>: Specify the extra memory for internal JVM use outside of the Java heap. This is a percentage ofmapperXmx.-n | -nodes <number of H2O nodes>: Specify the number of nodes.-nthreads <maximum number of CPUs>: Specify the number of CPUs to use. Enter-1to use all CPUs on the host, or enter a positive integer.-baseport <initialization port for H2O nodes>: Specify the initialization port for the H2O nodes. The default is54321.-ea: Enable assertions to verify boolean expressions for error detection.-verbose:gc: Include heap and garbage collection information in the logs.-XX:+PrintGCDetails: Include a short message after each garbage collection.-license <license file name>: Specify the directory of local filesytem location and the license file name.-o | -output <HDFS output directory>: Specify the HDFS directory for the output.-flow_dir <Saved Flows directory>: Specify the directory for saved flows. By default, H2O will try to find the HDFS home directory to use as the directory for flows. If the HDFS home directory is not found, flows cannot be saved unless a directory is specified using-flow_dir.

Accessing S3 Data from Hadoop

H2O launched on Hadoop can access S3 Data in addition to to HDFS. To enable access, follow the instructions below.

Edit Hadoop’s core-site.xml, then set the HADOOP_CONF_DIR environment property to the directory containing the core-site.xml file. For an example core-site.xml file, refer to Core-site.xml. Typically, the configuration directory for most Hadoop distributions is /etc/hadoop/conf.

You can also pass the S3 credentials when launching H2O with the Hadoop jar command. Use the -D flag to pass the credentials:

hadoop jar h2odriver.jar -Dfs.s3.awsAccessKeyId="${AWS_ACCESS_KEY}" -Dfs.s3n.awsSecretAccessKey="${AWS_SECRET_KEY}" -n 3 -mapperXmx 10g -output outputDirectory

where AWS_ACCESS_KEY represents your user name and AWS_SECRET_KEY represents your password.

Then import the data with the S3 URL path:

To import the data from the Flow API:

importFiles [ "s3n:/path/to/bucket/file/file.tab.gz" ]To import the data from the R API:

h2o.importFile(path = "s3n://bucket/path/to/file.csv")To import the data from the Python API:

h2o.import_frame(path = "s3n://bucket/path/to/file.csv")

… Using Docker

This walkthrough describes:

- Installing Docker on Mac or Linux OS

- Creating and modifying the Dockerfile

- Building a Docker image from the Dockerfile

- Running the Docker build

- Launching H2O

- Accessing H2O from the web browser or R

Walkthrough

Prerequisites

Linux kernel version 3.8+

or

Mac OS X 10.6+

- VirtualBox

- Latest version of Docker is installed and configured

- Docker daemon is running - enter all commands below in the Docker daemon window

- Using

Userdirectory (notroot)

Notes

- Older Linux kernel versions are known to cause kernel panics that break Docker; there are ways around it, but these should be attempted at your own risk. To check the version of your kernel, run

uname -rat the command prompt. The following walkthrough has been tested on a Mac OS X 10.10.1. - The Dockerfile always pulls the latest H2O release.

- The Docker image only needs to be built once.

Step 1 - Install and Launch Docker

Depending on your OS, select the appropriate installation method:

Step 2 - Create or Download Dockerfile

Note: If the following commands do not work, prepend with

sudo.

Create a folder on the Host OS to host your Dockerfile by running:

mkdir -p /data/h2o-master

Next, either download or create a Dockerfile, which is a build recipe that builds the container.

Download and use our Dockerfile template by running:

cd /data/h2o-master

wget https://raw.githubusercontent.com/h2oai/h2o-3/master/Dockerfile

The Dockerfile:

- obtains and updates the base image (Ubuntu 14.04)

- installs Java 7

- obtains and downloads the H2O build from H2O’s S3 repository

- exposes ports 54321 and 54322 in preparation for launching H2O on those ports

Step 3 - Build Docker image from Dockerfile

From the /data/h2o-master directory, run:

docker build -t "h2oai/master:v5" .

Note:

v5represents the current version number.

Because it assembles all the necessary parts for the image, this process can take a few minutes.

Step 4 - Run Docker Build

On a Mac, use the argument -p 54321:54321 to expressly map the port 54321. This is not necessary on Linux.

docker run -ti -p 54321:54321 h2o.ai/master:v5 /bin/bash

Note:

v5represents the version number.

Step 5 - Launch H2O

Navigate to the /opt directory and launch H2O. Change the value of -Xmx to the amount of memory you want to allocate to the H2O instance. By default, H2O launches on port 54321.

cd /opt

java -Xmx1g -jar h2o.jar

Step 6 - Access H2O from the web browser or R

- On Linux: After H2O launches, copy and paste the IP address and port of the H2O instance into the address bar of your browser. In the following example, the IP is

172.17.0.5:54321.

03:58:25.963 main INFO WATER: Cloud of size 1 formed [/172.17.0.5:54321 (00:00:00.000)]

- On OSX: Locate the IP address of the Docker’s network (

192.168.59.103in the following examples) that bridges to your Host OS by opening a new Terminal window (not a bash for your container) and runningboot2docker ip.

$ boot2docker ip

192.168.59.103

You can also view the IP address (192.168.99.100 in the example below) by scrolling to the top of the Docker daemon window:

## .

## ## ## ==

## ## ## ## ## ===

/"""""""""""""""""\___/ ===

~~~ {~~ ~~~~ ~~~ ~~~~ ~~~ ~ / ===- ~~~

\______ o __/

\ \ __/

\____\_______/

docker is configured to use the default machine with IP 192.168.99.100

For help getting started, check out the docs at https://docs.docker.com

After obtaining the IP address, point your browser to the specified ip address and port. In R, you can access the instance by installing the latest version of the H2O R package and running:

library(h2o)

dockerH2O <- h2o.init(ip = "192.168.59.103", port = 54321)

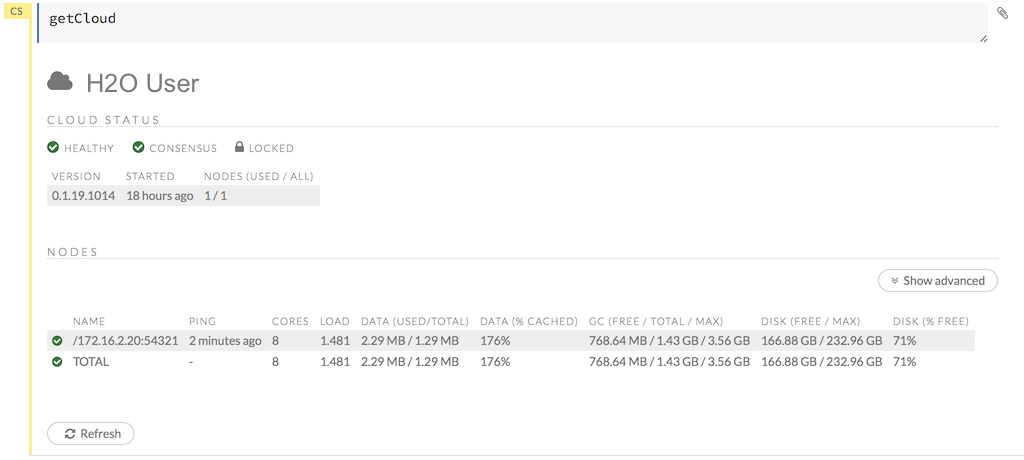

Flow Web UI …

H2O Flow is an open-source user interface for H2O. It is a web-based interactive environment that allows you to combine code execution, text, mathematics, plots, and rich media in a single document.

With H2O Flow, you can capture, rerun, annotate, present, and share your workflow. H2O Flow allows you to use H2O interactively to import files, build models, and iteratively improve them. Based on your models, you can make predictions and add rich text to create vignettes of your work - all within Flow’s browser-based environment.

Flow’s hybrid user interface seamlessly blends command-line computing with a modern graphical user interface. However, rather than displaying output as plain text, Flow provides a point-and-click user interface for every H2O operation. It allows you to access any H2O object in the form of well-organized tabular data.

H2O Flow sends commands to H2O as a sequence of executable cells. The cells can be modified, rearranged, or saved to a library. Each cell contains an input field that allows you to enter commands, define functions, call other functions, and access other cells or objects on the page. When you execute the cell, the output is a graphical object, which can be inspected to view additional details.

While H2O Flow supports REST API, R scripts, and CoffeeScript, no programming experience is required to run H2O Flow. You can click your way through any H2O operation without ever writing a single line of code. You can even disable the input cells to run H2O Flow using only the GUI. H2O Flow is designed to guide you every step of the way, by providing input prompts, interactive help, and example flows.

Introduction

This guide will walk you through how to use H2O’s web UI, H2O Flow. To view a demo video of H2O Flow, click here.

Getting Help

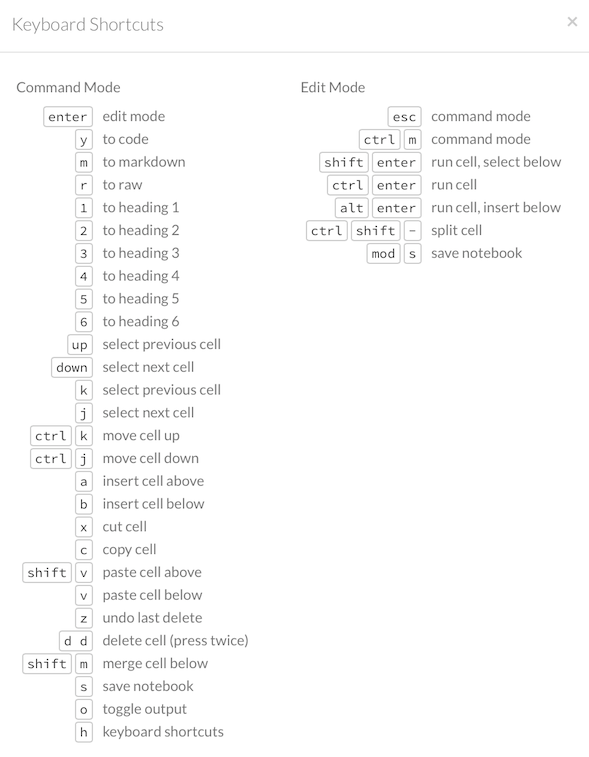

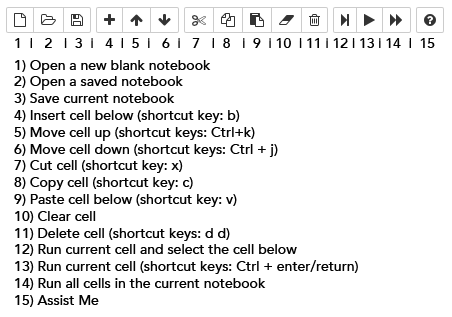

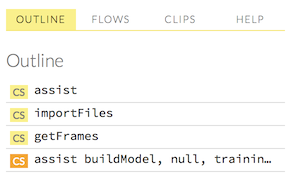

First, let’s go over the basics. Type h to view a list of helpful shortcuts.

The following help window displays:

To close this window, click the X in the upper-right corner, or click the Close button in the lower-right corner. You can also click behind the window to close it. You can also access this list of shortcuts by clicking the Help menu and selecting Keyboard Shortcuts.

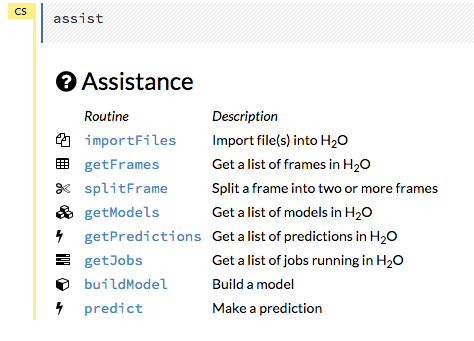

For additional help, click Help > Assist Me or click the Assist Me! button in the row of buttons below the menus.

You can also type assist in a blank cell and press Ctrl+Enter. A list of common tasks displays to help you find the correct command.

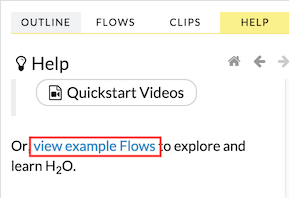

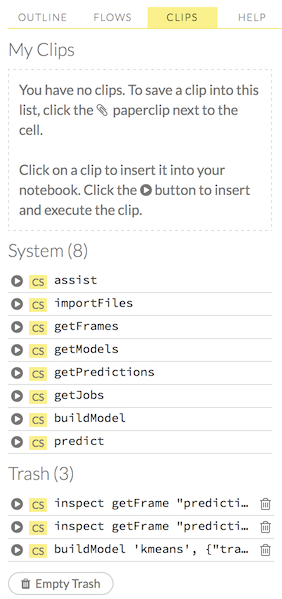

There are multiple resources to help you get started with Flow in the Help sidebar.

Note: To hide the sidebar, click the >> button above it.

To display the sidebar if it is hidden, click the << button.

To access this documentation, select the Flow Web UI… link below the General heading in the Help sidebar.

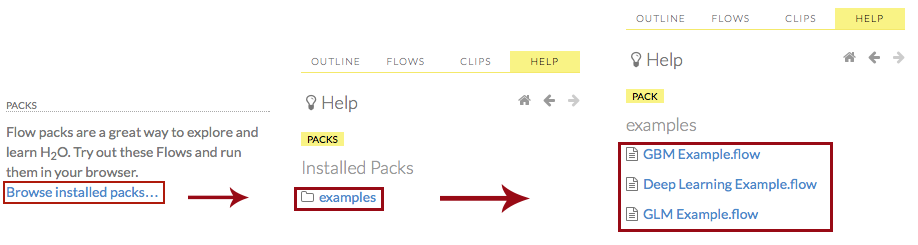

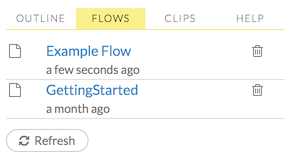

You can also explore the pre-configured flows available in H2O Flow for a demonstration of how to create a flow. To view the example flows:

Click the view example Flows link below the Quickstart Videos button in the Help sidebar

or

Click the Browse installed packs… link in the Packs subsection of the Help sidebar. Click the examples folder and select the example flow from the list.

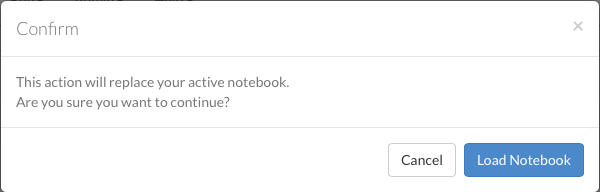

If you have a flow currently open, a confirmation window appears asking if the current notebook should be replaced. To load the example flow, click the Load Notebook button.

To view the REST API documentation, click the Help tab in the sidebar and then select the type of REST API documentation (Routes or Schemas).

Before getting started with H2O Flow, make sure you understand the different cell modes. Certain actions can only be performed when the cell is in a specific mode.

Understanding Cell Modes

- Using Edit Mode

- Using Command Mode

- Changing Cell Formats

- Running Cells

- Running Flows

- Using Keyboard Shortcuts

- Using Variables in Cells

- Using Flow Buttons

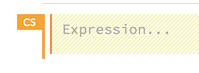

There are two modes for cells: edit and command.

Using Edit Mode

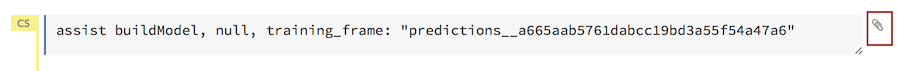

In edit mode, the cell is yellow with a blinking bar to indicate where text can be entered and there is an orange flag to the left of the cell.

Using Command Mode

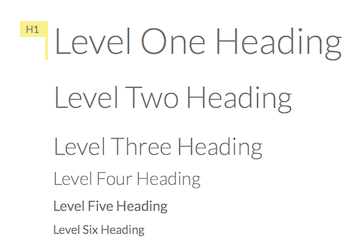

In command mode, the flag is yellow. The flag also indicates the cell’s format:

MD: Markdown

Note: Markdown formatting is not applied until you run the cell by:

clicking the Run button

or

orpressing Ctrl+Enter

CS: Code (default)

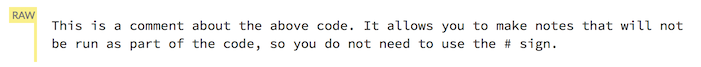

RAW: Raw format (for code comments)

H[1-6]: Heading level (where 1 is a first-level heading)

NOTE: If there is an error in the cell, the flag is red.

If the cell is executing commands, the flag is teal. The flag returns to yellow when the task is complete.

Changing Cell Formats

To change the cell’s format (for example, from code to Markdown), make sure you are in command (not edit) mode and that the cell you want to change is selected. The easiest way to do this is to click on the flag to the left of the cell. Enter the keyboard shortcut for the format you want to use. The flag’s text changes to display the current format.

| Cell Mode | Keyboard Shortcut |

|---|---|

| Code | y |

| Markdown | m |

| Raw text | r |

| Heading 1 | 1 |

| Heading 2 | 2 |

| Heading 3 | 3 |

| Heading 4 | 4 |

| Heading 5 | 5 |

| Heading 6 | 6 |

Running Cells

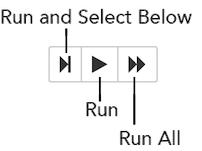

The series of buttons at the top of the page below the menus run cells in a flow.

- To run all cells in the flow, click the Flow menu, then click Run All Cells.

- To run the current cell and all subsequent cells, click the Flow menu, then click Run All Cells Below.

To run an individual cell in a flow, confirm the cell is in Edit Mode, then:

press Ctrl+Enter

or

click the Run button

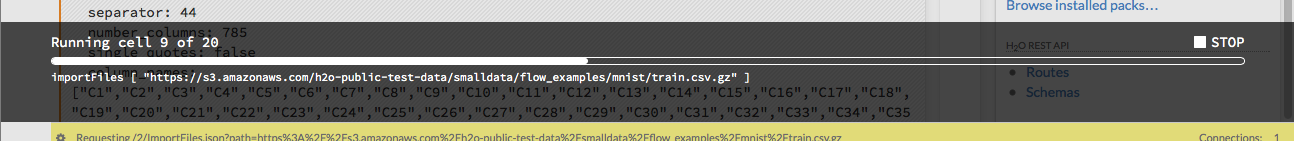

Running Flows

When you run the flow, a progress bar indicates the current status of the flow. You can cancel the currently running flow by clicking the Stop button in the progress bar.

When the flow is complete, a message displays in the upper right.

Note: If there is an error in the flow, H2O Flow stops at the cell that contains the error.

Using Keyboard Shortcuts

Here are some important keyboard shortcuts to remember:

- Click a cell and press Enter to enter edit mode, which allows you to change the contents of a cell.

- To exit edit mode, press Esc.

- To execute the contents of a cell, press the Ctrl and Enter buttons at the same time.

The following commands must be entered in command mode.

- To add a new cell above the current cell, press a.

- To add a new cell below the current cell, press b.

- To delete the current cell, press the d key twice. (dd).

You can view these shortcuts by clicking Help > Keyboard Shortcuts or by clicking the Help tab in the sidebar.

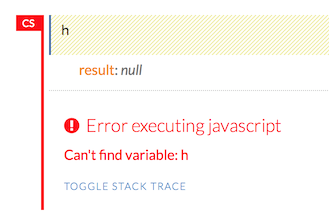

Using Variables in Cells

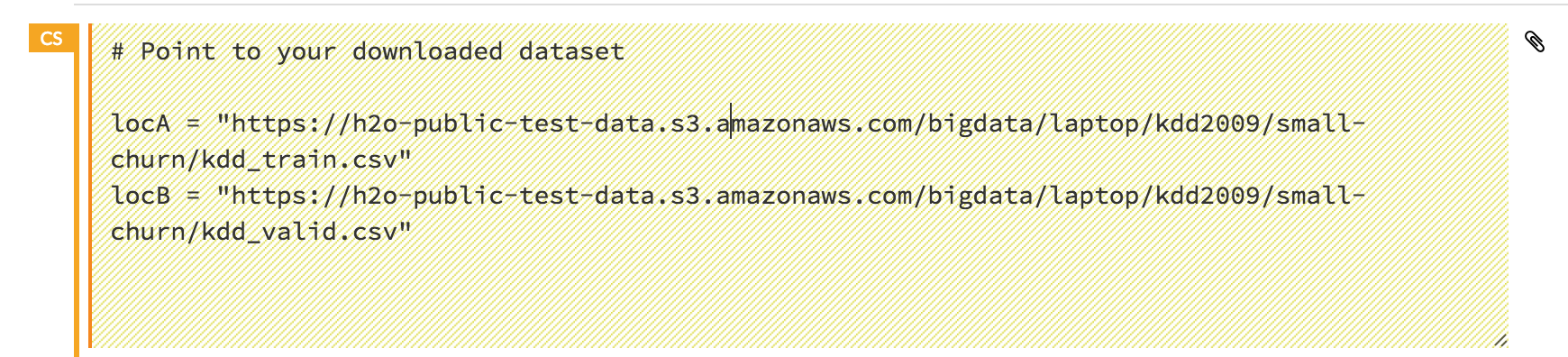

Variables can be used to store information such as download locations. To use a variable in Flow:

- Define the variable in a code cell (for example,

locA = "https://h2o-public-test-data.s3.amazonaws.com/bigdata/laptop/kdd2009/small-churn/kdd_train.csv").

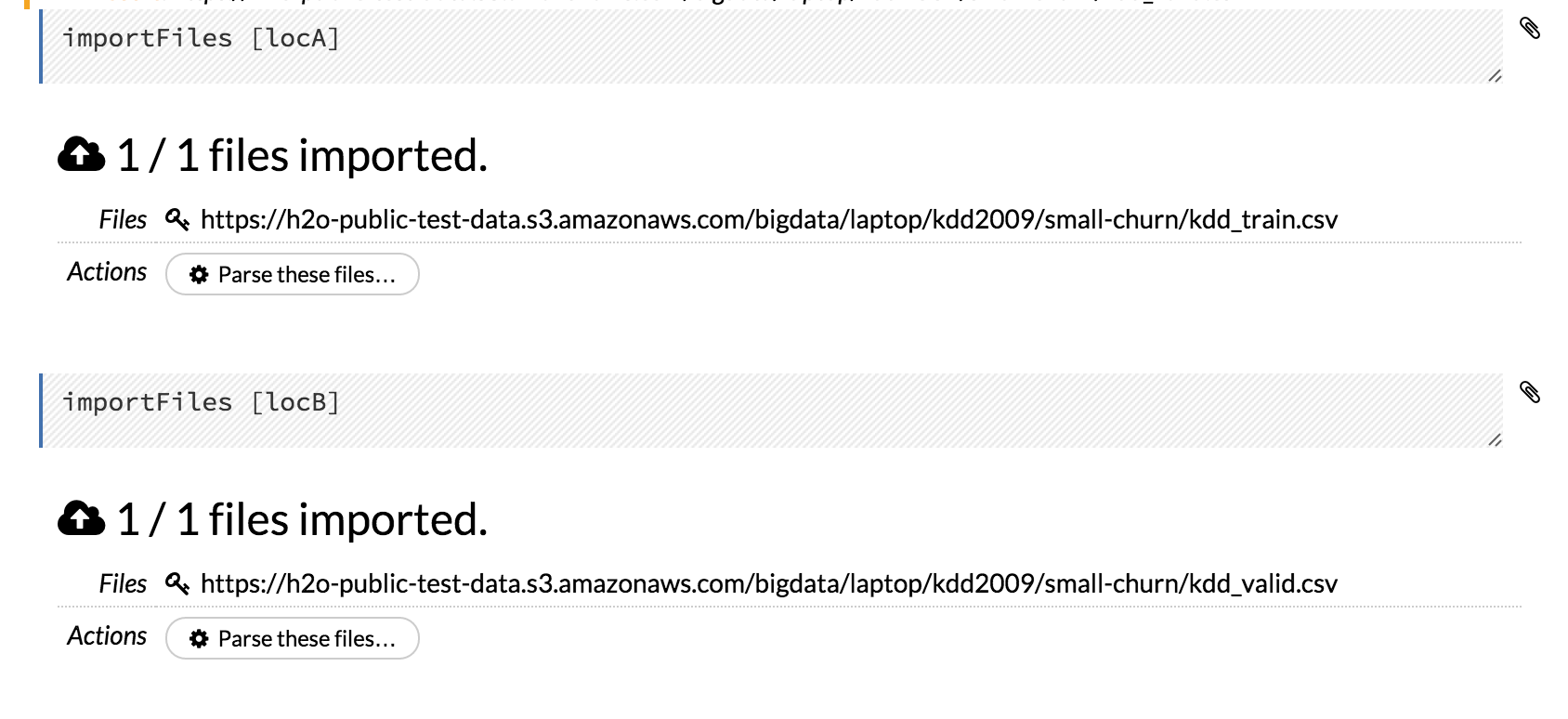

- Run the cell. H2O validates the variable.

- Use the variable in another code cell (for example,

importFiles [locA]). To further simplify your workflow, you can save the cells containing the variables and definitions as clips.

To further simplify your workflow, you can save the cells containing the variables and definitions as clips.

Using Flow Buttons

There are also a series of buttons at the top of the page below the flow name that allow you to save the current flow, add a new cell, move cells up or down, run the current cell, and cut, copy, or paste the current cell. If you hover over the button, a description of the button’s function displays.

You can also use the menus at the top of the screen to edit the order of the cells, toggle specific format types (such as input or output), create models, or score models. You can also access troubleshooting information or obtain help with Flow.

Note: To disable the code input and use H2O Flow strictly as a GUI, click the Cell menu, then Toggle Cell Input.

Now that you are familiar with the cell modes, let’s import some data.

… Importing Data

If you don’t have any data of your own to work with, you can find some example datasets here:

There are multiple ways to import data in H2O flow:

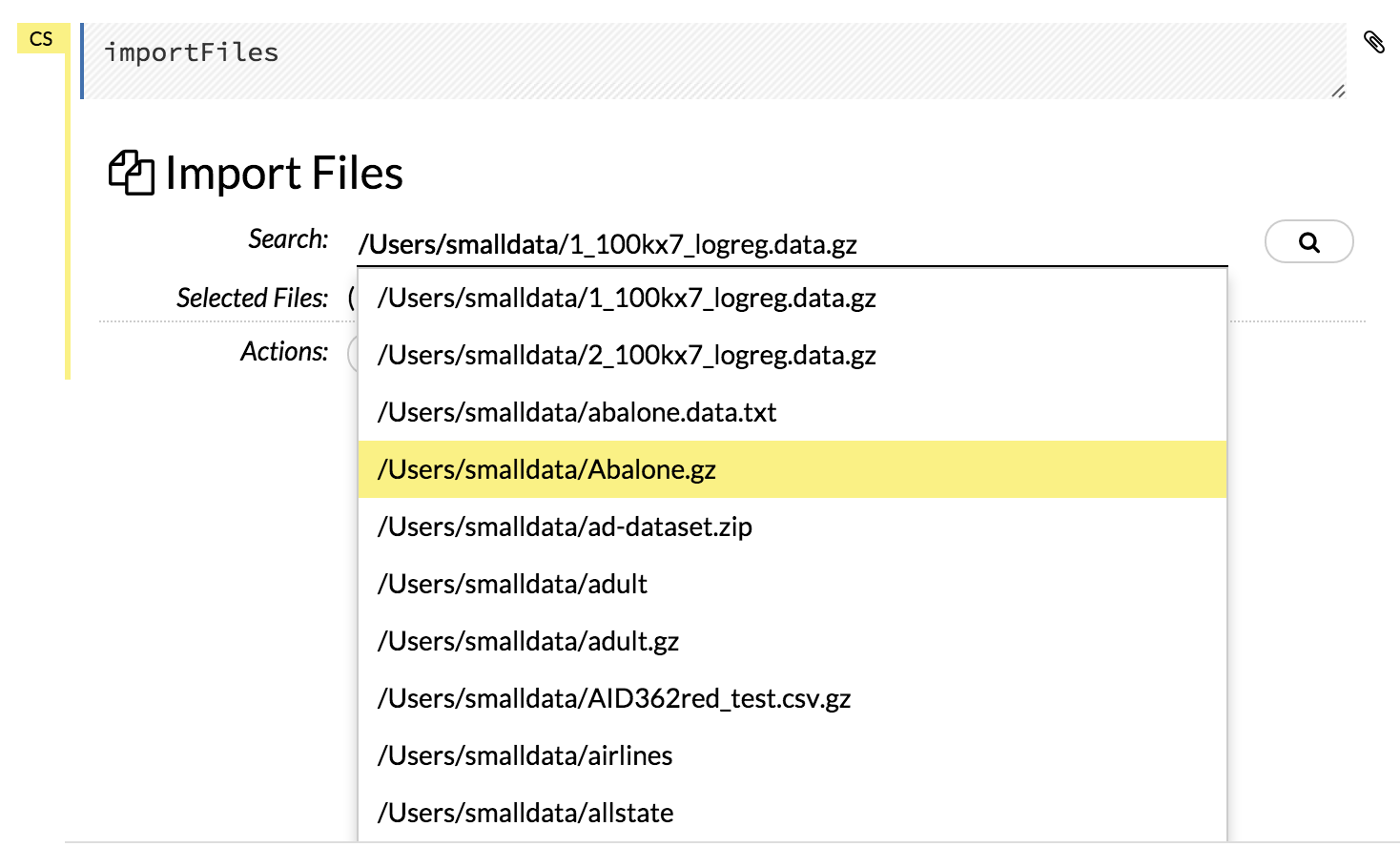

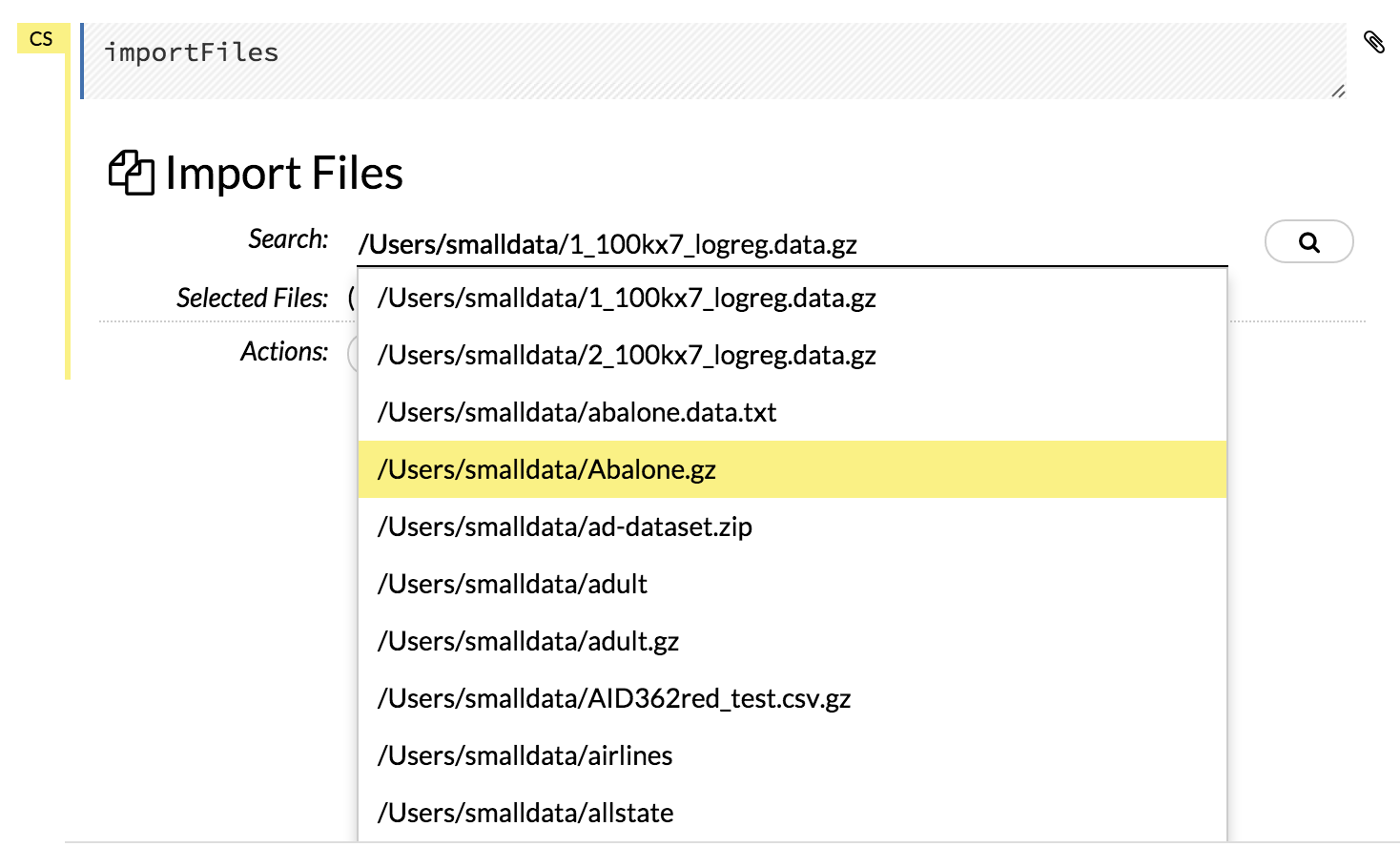

Click the Assist Me! button in the row of buttons below the menus, then click the importFiles link. Enter the file path in the auto-completing Search entry field and press Enter. Select the file from the search results and confirm it by clicking the Add All link.

In a blank cell, select the CS format, then enter

importFiles ["path/filename.format"](wherepath/filename.formatrepresents the complete file path to the file, including the full file name. The file path can be a local file path or a website address.Note: For S3 file locations, use the format

importFiles [ "s3n:/path/to/bucket/file/file.tab.gz" ]For an example of how to import a single file or a directory in R, refer to the following example.

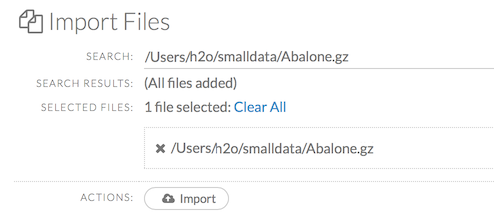

After selecting the file to import, the file path displays in the “Search Results” section. To import a single file, click the plus sign next to the file. To import all files in the search results, click the Add all link. The files selected for import display in the “Selected Files” section.

Note: If the file is compressed, it will only be read using a single thread. For best performance, we recommend uncompressing the file before importing, as this will allow use of the faster multithreaded distributed parallel reader during import. Please note that .zip files containing multiple files are not currently supported.

To import the selected file(s), click the Import button.

To remove all files from the “Selected Files” list, click the Clear All link.

To remove a specific file, click the X next to the file path.

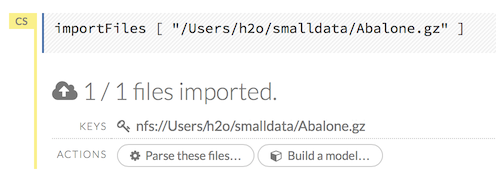

After you click the Import button, the raw code for the current job displays. A summary displays the results of the file import, including the number of imported files and their Network File System (nfs) locations.

Uploading Data

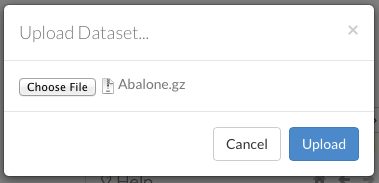

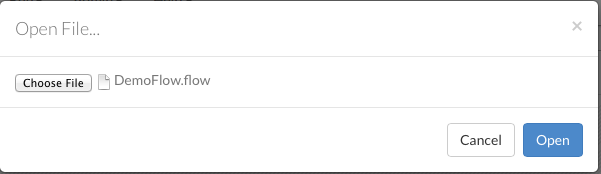

To upload a local file, click the Data menu and select Upload File…. Click the Choose File button, select the file, click the Choose button, then click the Upload button.

When the file has uploaded successfully, a message displays in the upper right and the Setup Parse cell displays.

Ok, now that your data is available in H2O Flow, let’s move on to the next step: parsing. Click the Parse these files button to continue.

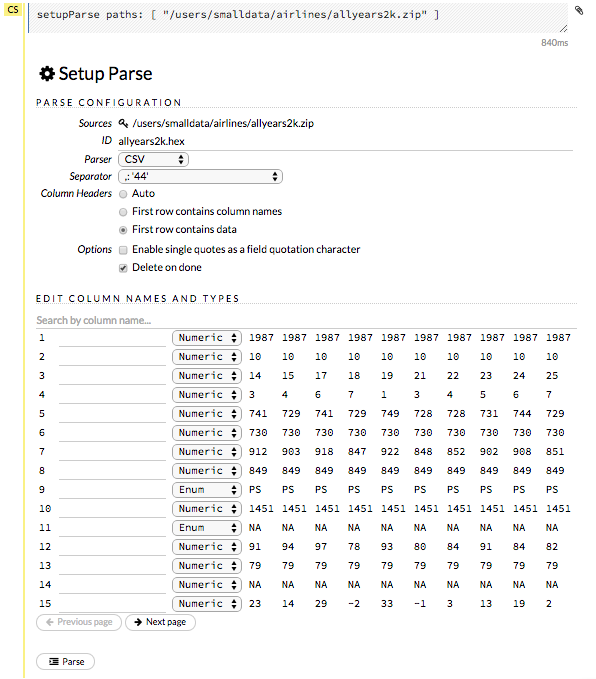

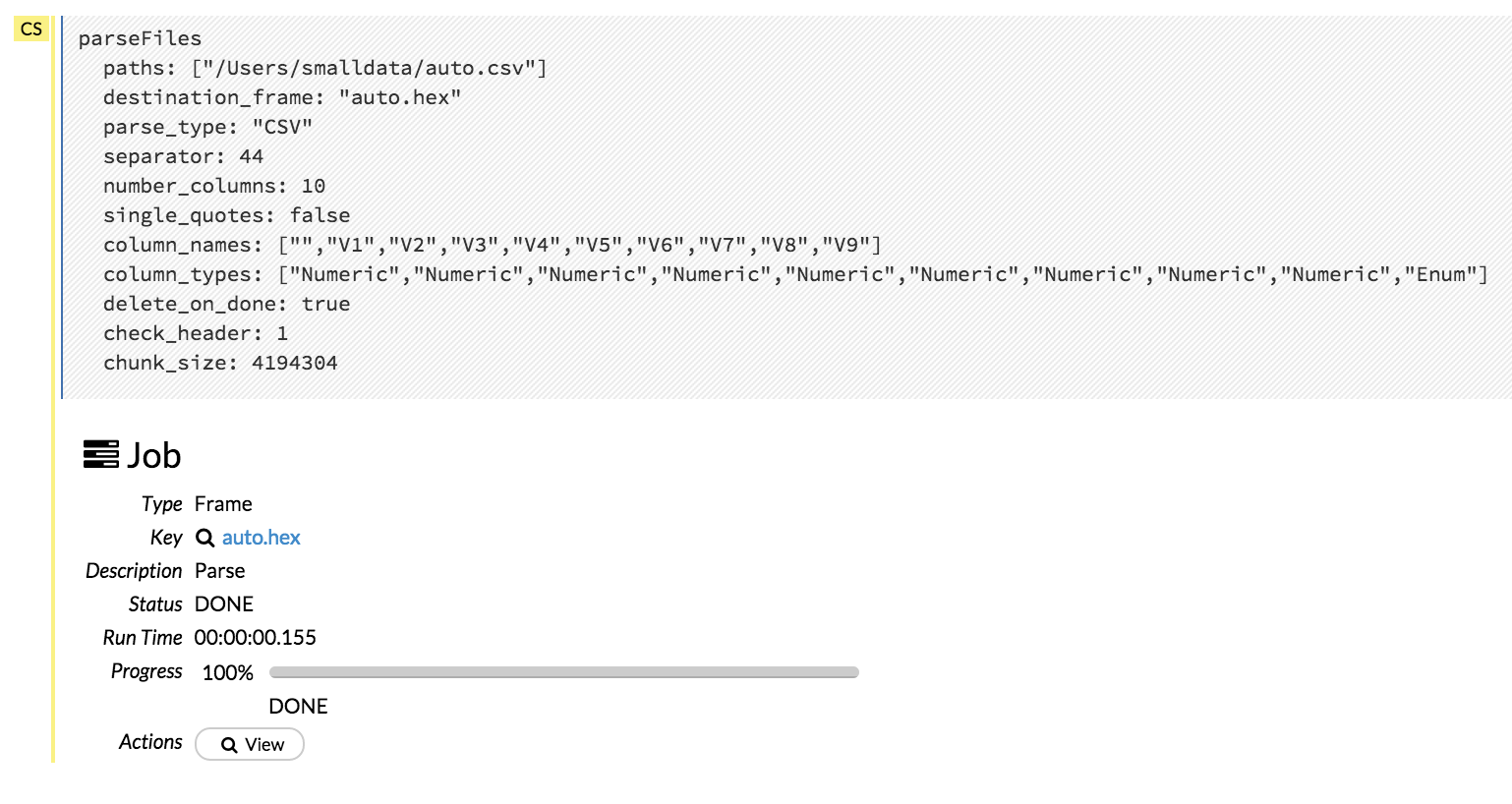

Parsing Data

After you have imported your data, parse the data.

The read-only Sources field displays the file path for the imported data selected for parsing.

The ID contains the auto-generated name for the parsed data (by default, the file name of the imported file with .hex as the file extension). Use the default name or enter a custom name in this field.

Select the parser type (if necessary) from the drop-down Parser list. For most data parsing, H2O automatically recognizes the data type, so the default settings typically do not need to be changed. The following options are available:

- Auto

- ARFF

- XLS

- XLSX

- CSV

SVMLight

Note: For SVMLight data, the column indices must be >= 1 and the columns must be in ascending order.

If a separator or delimiter is used, select it from the Separator list.

Select a column header option, if applicable:

- Auto: Automatically detect header types.

- First row contains column names: Specify heading as column names.

- First row contains data: Specify heading as data. This option is selected by default.

Select any necessary additional options:

- Enable single quotes as a field quotation character: Treat single quote marks (also known as apostrophes) in the data as a character, rather than an enum. This option is not selected by default.

- Delete on done: Check this checkbox to delete the imported data after parsing. This option is selected by default.

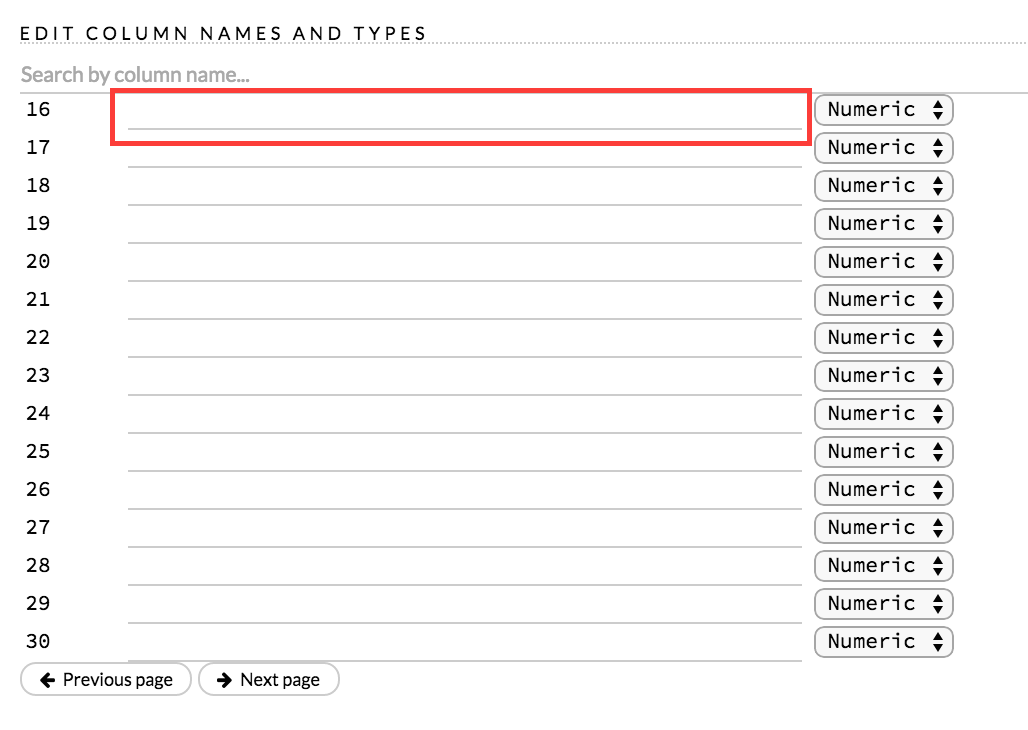

A preview of the data displays in the “Edit Column Names and Types” section.

To change or add a column name, edit or enter the text in the column’s entry field. In the screenshot below, the entry field for column 16 is highlighted in red.

To change the column type, select the drop-down list to the right of the column name entry field and select the data type. The options are:

- Unknown

- Numeric

- Enum

- Time

- UUID

- String

- Invalid

You can search for a column by entering it in the Search by column name… entry field above the first column name entry field. As you type, H2O displays the columns that match the specified search terms.

Note: Only custom column names are searchable. Default column names cannot be searched.

To navigate the data preview, click the <- Previous page or -> Next page buttons.

After making your selections, click the Parse button.

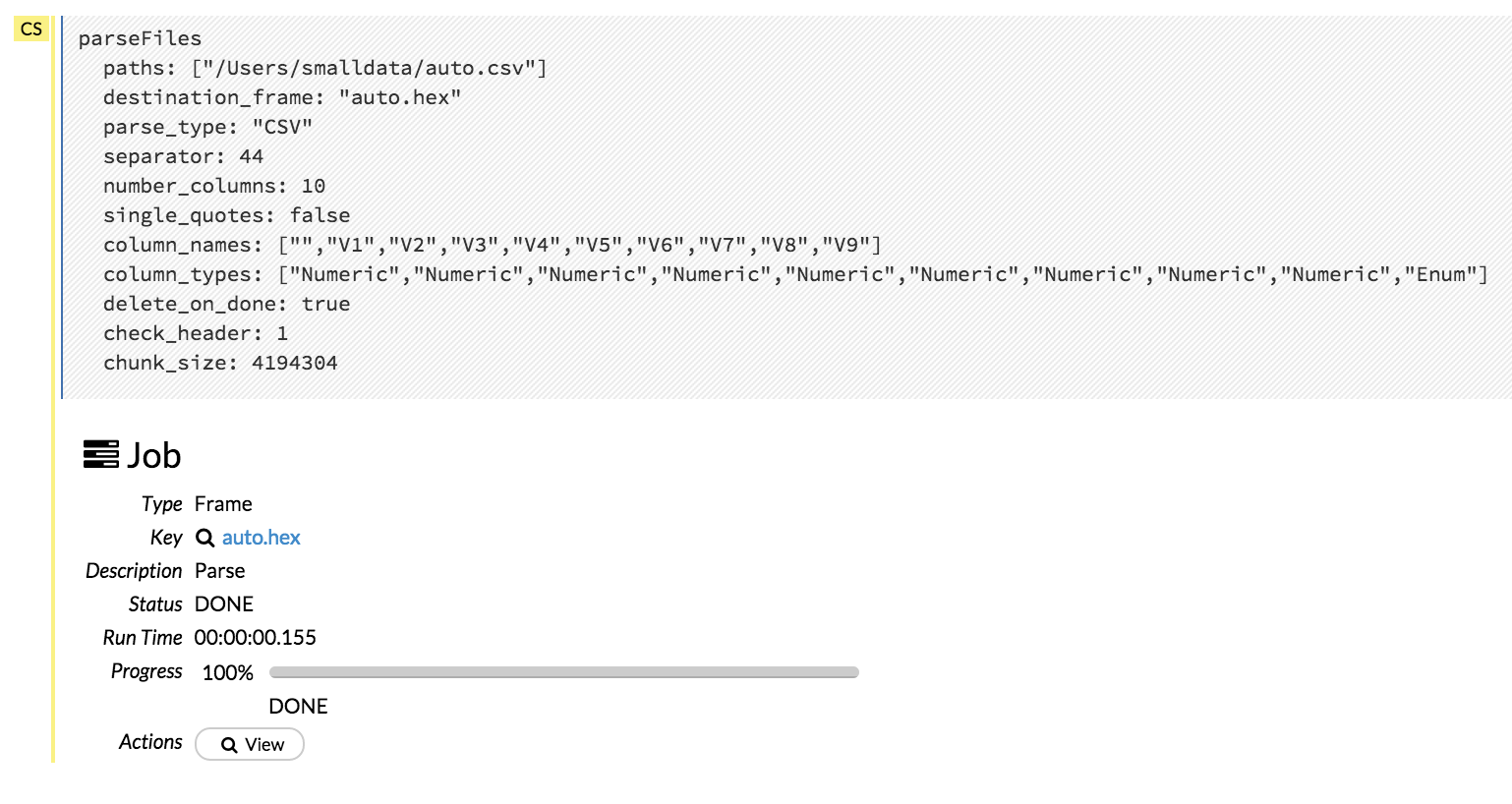

After you click the Parse button, the code for the current job displays.

Since we’ve submitted a couple of jobs (data import & parse) to H2O now, let’s take a moment to learn more about jobs in H2O.

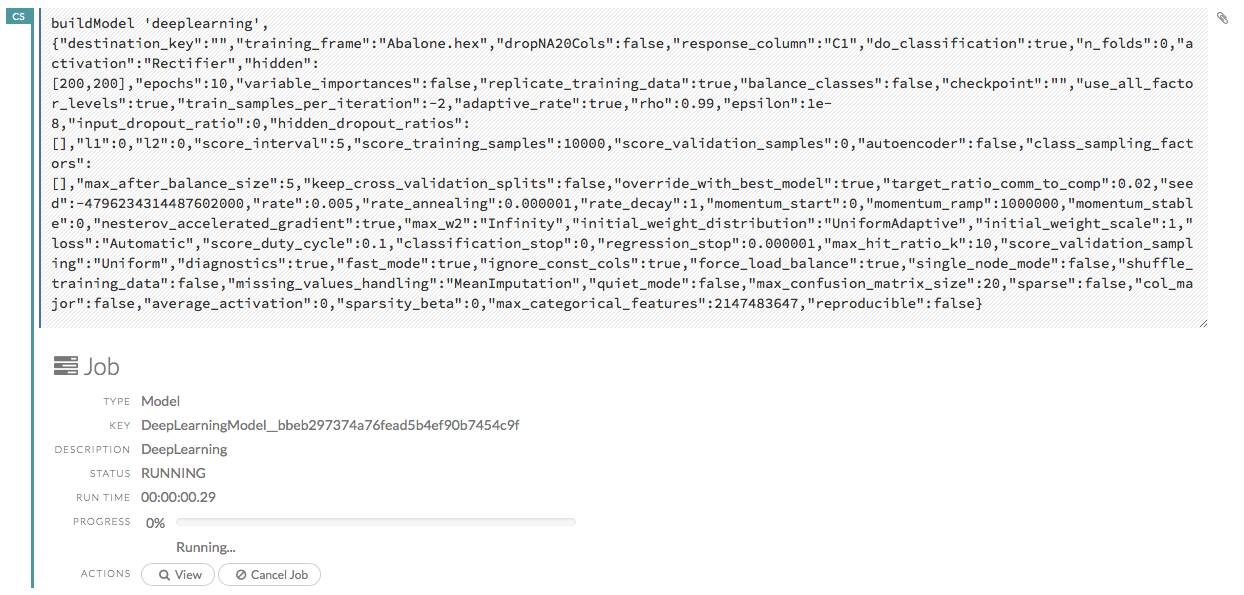

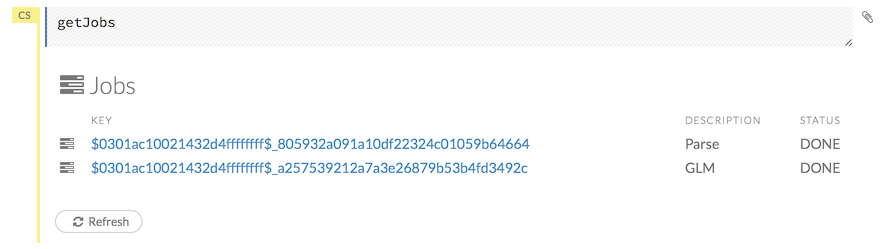

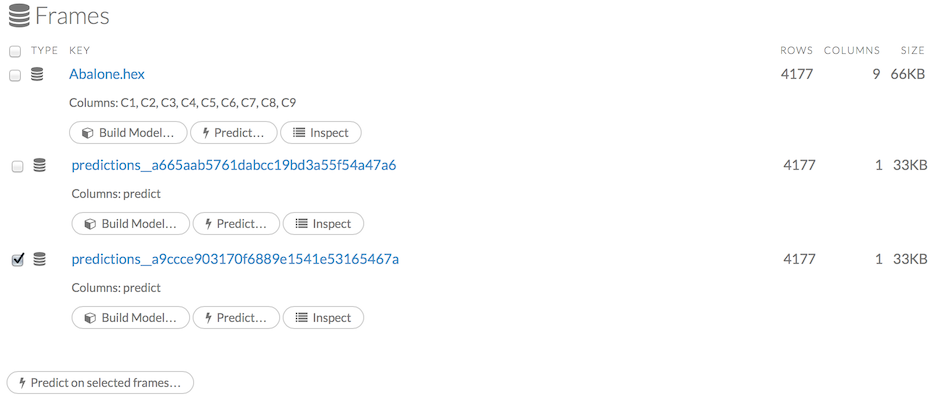

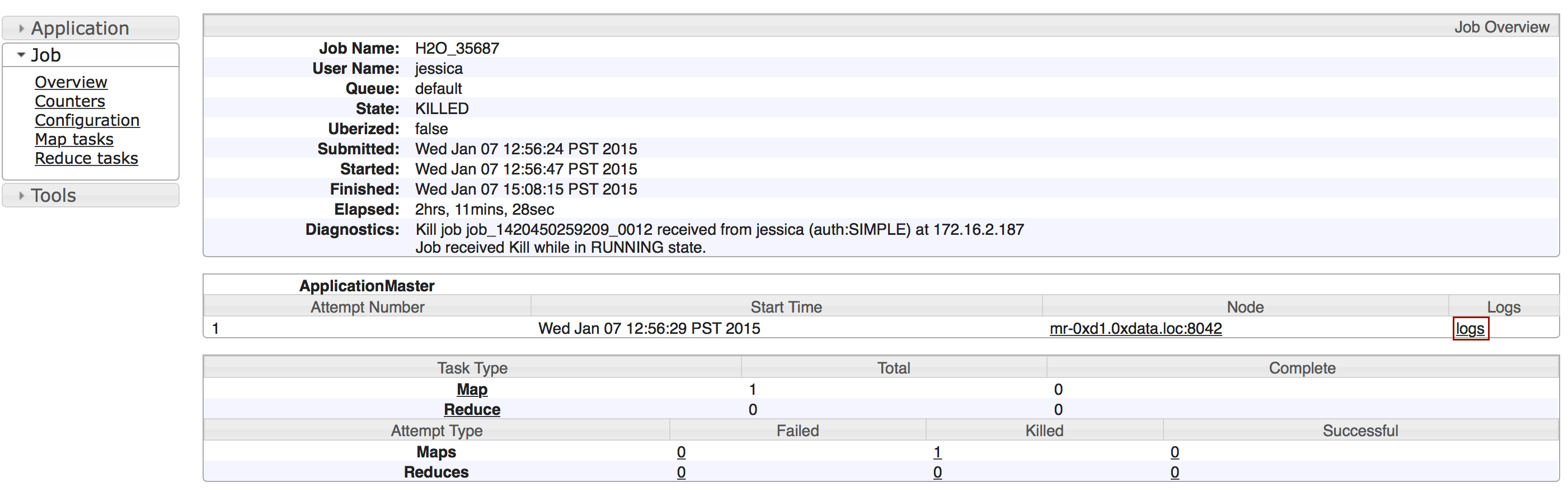

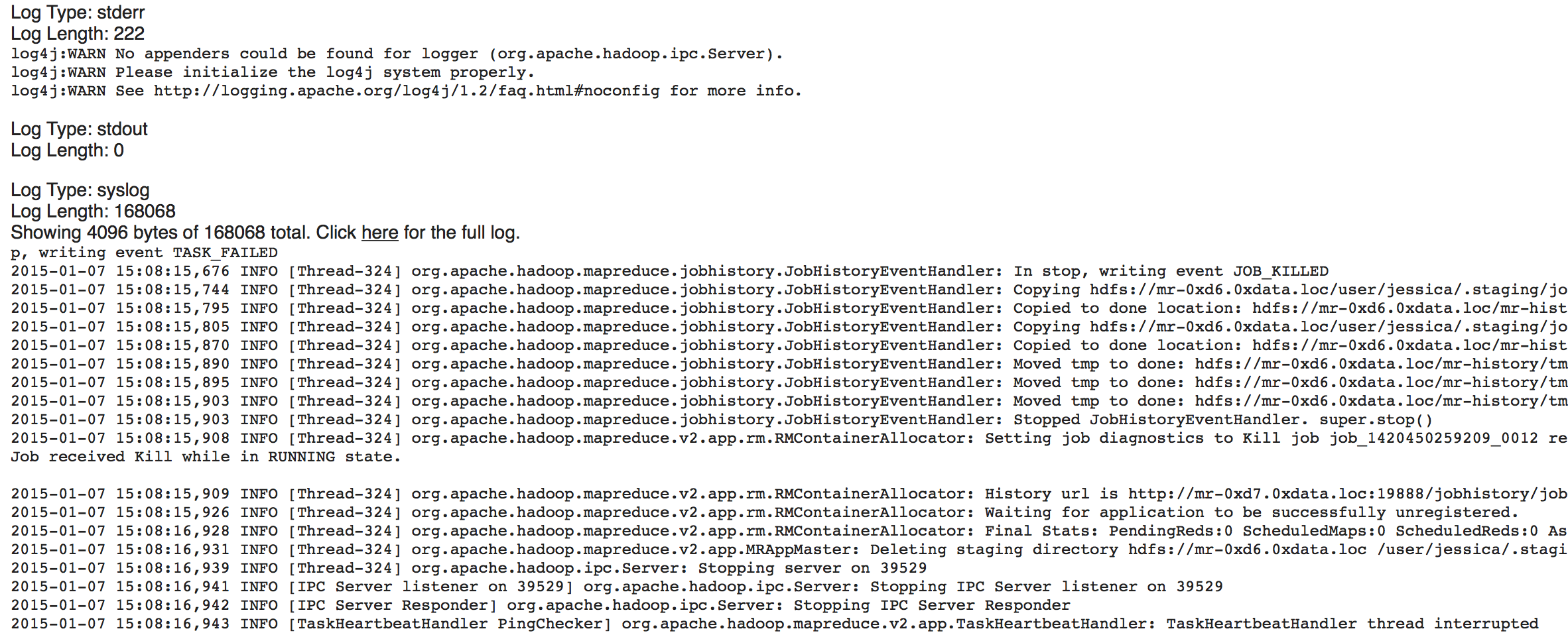

Viewing Jobs

Any command (such as importFiles) you enter in H2O is submitted as a job, which is associated with a key. The key identifies the job within H2O and is used as a reference.

Viewing All Jobs

To view all jobs, click the Admin menu, then click Jobs, or enter getJobs in a cell in CS mode.

The following information displays:

- Type (for example,

FrameorModel) - Link to the object

- Description of the job type (for example,

ParseorGBM) - Start time

- End time

- Run time

To refresh this information, click the Refresh button. To view the details of the job, click the View button.

Viewing Specific Jobs

To view a specific job, click the link in the “Destination” column.

The following information displays:

- Type (for example,

Frame) - Link to object (key)

- Description (for example,

Parse) - Status

- Run time

- Progress

NOTE: For a better understanding of how jobs work, make sure to review the Viewing Frames section as well.

Ok, now that you understand how to find jobs in H2O, let’s submit a new one by building a model.

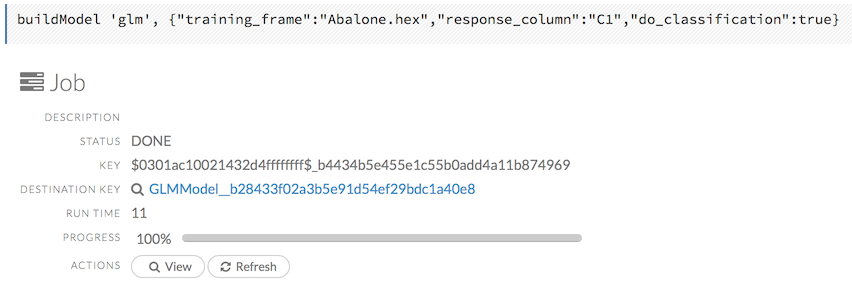

… Building Models

To build a model:

Click the Assist Me! button in the row of buttons below the menus and select buildModel

or

Click the Assist Me! button, select getFrames, then click the Build Model… button below the parsed .hex data set

or

Click the View button after parsing data, then click the Build Model button

or

Click the drop-down Model menu and select the model type from the list

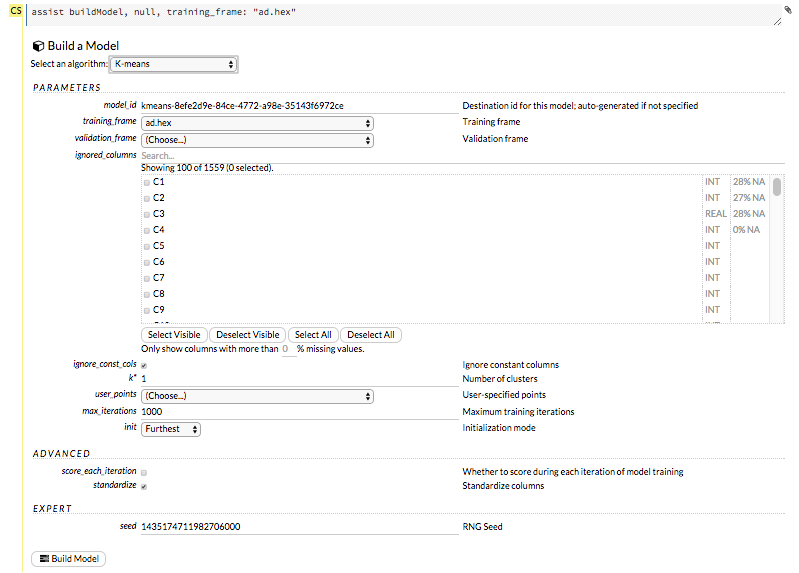

The Build Model… button can be accessed from any page containing the .hex key for the parsed data (for example, getJobs > getFrame). The following image depicts the K-Means model type. Available options vary depending on model type.

In the Build a Model cell, select an algorithm from the drop-down menu:

- K-means: Create a K-Means model.

- Generalized Linear Model: Create a Generalized Linear model.

- Distributed RF: Create a distributed Random Forest model.

- Naïve Bayes: Create a Naïve Bayes model.

- Principal Component Analysis: Create a Principal Components Analysis model for modeling without regularization or performing dimensionality reduction.

- Gradient Boosting Machine: Create a Gradient Boosted model

- Deep Learning: Create a Deep Learning model.

The available options vary depending on the selected model. If an option is only available for a specific model type, the model type is listed. If no model type is specified, the option is applicable to all model types.

model_id: (Optional) Enter a custom name for the model to use as a reference. By default, H2O automatically generates an ID containing the model type (for example,

gbm-6f6bdc8b-ccbc-474a-b590-4579eea44596).training_frame: (Required) Select the dataset used to build the model.

validation_frame: (Optional) Select the dataset used to evaluate the accuracy of the model.

nfolds: (GLM, GBM, DL, DRF) Specify the number of folds for cross-validation.

response_column: (Required for GLM, GBM, DL, DRF, Naïve Bayes) Select the column to use as the independent variable.

ignored_columns: (Optional) Click the checkbox next to a column name to add it to the list of columns excluded from the model. To add all columns, click the All button. To remove a column from the list of ignored columns, click the X next to the column name. To remove all columns from the list of ignored columns, click the None button. To search for a specific column, type the column name in the Search field above the column list. To only show columns with a specific percentage of missing values, specify the percentage in the Only show columns with more than 0% missing values field. To change the selections for the hidden columns, use the Select Visible or Deselect Visible buttons.

ignore_const_cols: (Optional) Check this checkbox to ignore constant training columns, since no information can be gained from them. This option is selected by default.

transform: (PCA) Select the transformation method for the training data: None, Standardize, Normalize, Demean, or Descale.

pca_method: (PCA) Select the algorithm to use for computing the principal components:

- GramSVD: Uses a distributed computation of the Gram matrix, followed by a local SVD using the JAMA package

- Power: Computes the SVD using the power iteration method

- Randomized: Uses randomized subspace iteration method

- GLRM: Fits a generalized low-rank model with L2 loss function and no regularization and solves for the SVD using local matrix algebra

family: (GLM) Select the model type (Gaussian, Binomial, Multinomial, Poisson, Gamma, or Tweedie).

solver: (GLM) Select the solver to use (AUTO, IRLSM, L_BFGS, COORDINATE_DESCENT_NAIVE, or COORDINATE_DESCENT). IRLSM is fast on on problems with a small number of predictors and for lambda-search with L1 penalty, while L_BFGS scales better for datasets with many columns. COORDINATE_DESCENT is IRLSM with the covariance updates version of cyclical coordinate descent in the innermost loop. COORDINATE_DESCENT_NAIVE is IRLSM with the naive updates version of cyclical coordinate descent in the innermost loop. COORDINATE_DESCENT_NAIVE and COORDINATE_DESCENT are currently experimental.

link: (GLM) Select a link function (Identity, Family_Default, Logit, Log, Inverse, or Tweedie).

alpha: (GLM) Specify the regularization distribution between L2 and L2.

lambda: (GLM) Specify the regularization strength.

lambda_search: (GLM) Check this checkbox to enable lambda search, starting with lambda max. The given lambda is then interpreted as lambda min.

non-negative: (GLM) To force coefficients to be non-negative, check this checkbox.

standardize: (K-Means, GLM) To standardize the numeric columns to have mean of zero and unit variance, check this checkbox. Standardization is highly recommended; if you do not use standardization, the results can include components that are dominated by variables that appear to have larger variances relative to other attributes as a matter of scale, rather than true contribution. This option is selected by default.

beta_constraints: (GLM) To use beta constraints, select a dataset from the drop-down menu. The selected frame is used to constraint the coefficient vector to provide upper and lower bounds.

min_rows: (GBM, DRF) Specify the minimum number of observations for a leaf (“nodesize” in R).

nbins: (GBM, DRF) (Numerical [real/int] only) Specify the minimum number of bins for the histogram to build, then split at the best point.

nbins_cats: (GBM, DRF) (Categorical [factors/enums] only) Specify the maximum number of bins for the histogram to build, then split at the best point. Higher values can lead to more overfitting. The levels are ordered alphabetically; if there are more levels than bins, adjacent levels share bins. This value has a more significant impact on model fitness than nbins. Larger values may increase runtime, especially for deep trees and large clusters, so tuning may be required to find the optimal value for your configuration.

learn_rate: (GBM) Specify the learning rate. The range is 0.0 to 1.0.

distribution: (GBM, DL) Select the distribution type from the drop-down list. The options are auto, bernoulli, multinomial, gaussian, poisson, gamma, or tweedie.

sample_rate: (GBM, DRF) Specify the row sampling rate (x-axis). The range is 0.0 to 1.0. Higher values may improve training accuracy. Test accuracy improves when either columns or rows are sampled. For details, refer to “Stochastic Gradient Boosting” (Friedman, 1999).

col_sample_rate: (GBM, DRF) Specify the column sampling rate (y-axis). The range is 0.0 to 1.0. Higher values may improve training accuracy. Test accuracy improves when either columns or rows are sampled. For details, refer to “Stochastic Gradient Boosting” (Friedman, 1999).

mtries: (DRF) Specify the columns to randomly select at each level. If the default value of

-1is used, the number of variables is the square root of the number of columns for classification and p/3 for regression (where p is the number of predictors).binomial_double_trees: (DRF) (Binary classification only) Build twice as many trees (one per class). Enabling this option can lead to higher accuracy, while disabling can result in faster model building. This option is disabled by default.

score_each_iteration: (K-Means, DRF, Naïve Bayes, PCA, GBM, GLM) To score during each iteration of the model training, check this checkbox.

k*: (K-Means, PCA) For K-Means, specify the number of clusters. For PCA, specify the rank of matrix approximation.

user_points: (K-Means) For K-Means, specify the number of initial cluster centers.

max_iterations: (K-Means, PCA, GLM) Specify the number of training iterations.

init: (K-Means) Select the initialization mode. The options are Furthest, PlusPlus, Random, or User.

Note: If PlusPlus is selected, the initial Y matrix is chosen by the final cluster centers from the K-Means PlusPlus algorithm.

tweedie_variance_power: (GLM) (Only applicable if Tweedie is selected for Family) Specify the Tweedie variance power.

tweedie_link_power: (GLM) (Only applicable if Tweedie is selected for Family) Specify the Tweedie link power.

activation: (DL) Select the activation function (Tanh, TanhWithDropout, Rectifier, RectifierWithDropout, Maxout, MaxoutWithDropout). The default option is Rectifier.

hidden: (DL) Specify the hidden layer sizes (e.g., 100,100). For Grid Search, use comma-separated values: (10,10),(20,20,20). The default value is [200,200]. The specified value(s) must be positive.

epochs: (DL) Specify the number of times to iterate (stream) the dataset. The value can be a fraction.

variable_importances: (DL) Check this checkbox to compute variable importance. This option is not selected by default.

laplace: (Naïve Bayes) Specify the Laplace smoothing parameter.

min_sdev: (Naïve Bayes) Specify the minimum standard deviation to use for observations without enough data.

eps_sdev: (Naïve Bayes) Specify the threshold for standard deviation. If this threshold is not met, the min_sdev value is used.

min_prob: (Naïve Bayes) Specify the minimum probability to use for observations without enough data.

eps_prob: (Naïve Bayes) Specify the threshold for standard deviation. If this threshold is not met, the min_sdev value is used.

compute_metrics: (Naïve Bayes, PCA) To compute metrics on training data, check this checkbox. The Naïve Bayes classifier assumes independence between predictor variables conditional on the response, and a Gaussian distribution of numeric predictors with mean and standard deviation computed from the training dataset. When building a Naïve Bayes classifier, every row in the training dataset that contains at least one NA will be skipped completely. If the test dataset has missing values, then those predictors are omitted in the probability calculation during prediction.

Advanced Options

fold_assignment: (GLM, GBM, DL, DRF, K-Means) (Applicable only if a value for nfolds is specified and fold_column is not selected) Select the cross-validation fold assignment scheme. The available options are Random or Modulo.

fold_column: (GLM, GBM, DL, DRF, K-Means) Select the column that contains the cross-validation fold index assignment per observation.

offset_column: (GLM, DRF, GBM) Select a column to use as the offset.

Note: Offsets are per-row “bias values” that are used during model training. For Gaussian distributions, they can be seen as simple corrections to the response (y) column. Instead of learning to predict the response (y-row), the model learns to predict the (row) offset of the response column. For other distributions, the offset corrections are applied in the linearized space before applying the inverse link function to get the actual response values. For more information, refer to the following link.

weights_column: (GLM, DL, DRF, GBM) Select a column to use for the observation weights. The specified

weights_columnmust be included in the specifiedtraining_frame. Python only: To use a weights column when passing an H2OFrame toxinstead of a list of column names, the specifiedtraining_framemust contain the specifiedweights_column.Note: Weights are per-row observation weights and do not increase the size of the data frame. This is typically the number of times a row is repeated, but non-integer values are supported as well. During training, rows with higher weights matter more, due to the larger loss function pre-factor.

loss: (DL) Select the loss function. For DL, the options are Automatic, Quadratic, CrossEntropy, Huber, or Absolute and the default value is Automatic. Absolute, Quadratic, and Huber are applicable for regression or classification, while CrossEntropy is only applicable for classification. Huber can improve for regression problems with outliers.

checkpoint: (DL, DRF, GBM) Enter a model key associated with a previously-trained model. Use this option to build a new model as a continuation of a previously-generated model.

use_all_factor_levels: (DL, PCA) Check this checkbox to use all factor levels in the possible set of predictors; if you enable this option, sufficient regularization is required. By default, the first factor level is skipped. For Deep Learning models, this option is useful for determining variable importances and is automatically enabled if the autoencoder is selected.

train_samples_per_iteration: (DL) Specify the number of global training samples per MapReduce iteration. To specify one epoch, enter 0. To specify all available data (e.g., replicated training data), enter -1. To use the automatic values, enter -2.

adaptive_rate: (DL) Check this checkbox to enable the adaptive learning rate (ADADELTA). This option is selected by default. If this option is enabled, the following parameters are ignored:

rate,rate_decay,rate_annealing,momentum_start,momentum_ramp,momentum_stable, andnesterov_accelerated_gradient.input_dropout_ratio: (DL) Specify the input layer dropout ratio to improve generalization. Suggested values are 0.1 or 0.2. The range is >= 0 to <1.

l1: (DL) Specify the L1 regularization to add stability and improve generalization; sets the value of many weights to 0.

l2: (DL) Specify the L2 regularization to add stability and improve generalization; sets the value of many weights to smaller values.

balance_classes: (GBM, DL) Oversample the minority classes to balance the class distribution. This option is not selected by default and can increase the data frame size. This option is only applicable for classification. Majority classes can be undersampled to satisfy the Max_after_balance_size parameter.

Note:

balance_classesbalances over just the target, not over all classes in the training frame.max_confusion_matrix_size: (DRF, DL, Naïve Bayes, GBM, GLM) Specify the maximum size (in number of classes) for confusion matrices to be printed in the Logs.

max_hit_ratio_k: (DRF, DL, Naïve Bayes, GBM, GLM) Specify the maximum number (top K) of predictions to use for hit ratio computation. Applicable to multinomial only. To disable, enter 0.

r2_stopping: (GBM, DRF) Specify a threshold for the coefficient of determination (r^2) metric value. When this threshold is met or exceeded, H2O stops making trees.

build_tree_one_node: (DRF, GBM) To run on a single node, check this checkbox. This is suitable for small datasets as there is no network overhead but fewer CPUs are used. The default setting is disabled.

rate: (DL) Specify the learning rate. Higher rates result in less stable models and lower rates result in slower convergence. Not applicable if adaptive_rate is enabled.

rate_annealing: (DL) Specify the learning rate annealing. The formula is rate/(1+rate_annealing value * samples). Not applicable if adaptive_rate is enabled.

momentum_start: (DL) Specify the initial momentum at the beginning of training. A suggested value is 0.5. Not applicable if adaptive_rate is enabled.

momentum_ramp: (DL) Specify the number of training samples for increasing the momentum. Not applicable if adaptive_rate is enabled.

momentum_stable: (DL) Specify the final momentum value reached after the momentum_ramp training samples. Not applicable if adaptive_rate is enabled.

nesterov_accelerated_gradient: (DL) Check this checkbox to use the Nesterov accelerated gradient. This option is recommended and selected by default. Not applicable is adaptive_rate is enabled.

hidden_dropout_ratios: (DL) Specify the hidden layer dropout ratios to improve generalization. Specify one value per hidden layer, each value between 0 and 1 (exclusive). There is no default value. This option is applicable only if TanhwithDropout, RectifierwithDropout, or MaxoutWithDropout is selected from the Activation drop-down list.

tweedie_power: (DL, GBM) (Only applicable if Tweedie is selected for Family) Specify the Tweedie power. The range is from 1 to 2. For a normal distribution, enter

0. For Poisson distribution, enter1. For a gamma distribution, enter2. For a compound Poisson-gamma distribution, enter a value greater than 1 but less than 2. For more information, refer to Tweedie distribution.score_interval: (DL) Specify the shortest time interval (in seconds) to wait between model scoring.

score_training_samples: (DL) Specify the number of training set samples for scoring. To use all training samples, enter 0.

score_validation_samples: (DL) (Requires selection from the validation_frame drop-down list) This option is applicable to classification only. Specify the number of validation set samples for scoring. To use all validation set samples, enter 0.

score_duty_cycle: (DL) Specify the maximum duty cycle fraction for scoring. A lower value results in more training and a higher value results in more scoring. The value must be greater than 0 and less than 1.

autoencoder: (DL) Check this checkbox to enable the Deep Learning autoencoder. This option is not selected by default.

Note: This option requires a loss function other than CrossEntropy. If this option is enabled, use_all_factor_levels must be enabled.

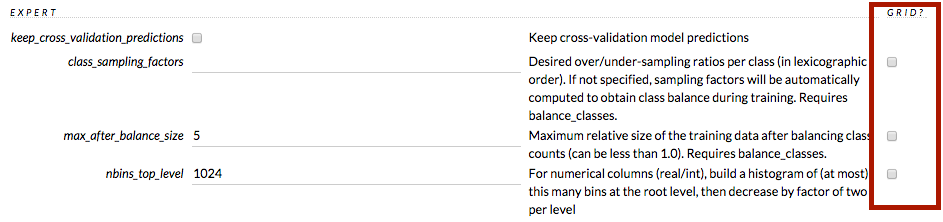

Expert Options

keep_cross_validation_predictions: (GLM, GBM, DL, DRF, K-Means) To keep the cross-validation predictions, check this checkbox.

class_sampling_factors: (DRF, GBM, DL) Specify the per-class (in lexicographical order) over/under-sampling ratios. By default, these ratios are automatically computed during training to obtain the class balance. This option is only applicable for classification problems and when balance_classes is enabled.

overwrite_with_best_model: (DL) Check this checkbox to overwrite the final model with the best model found during training. This option is selected by default.

target_ratio_comm_to_comp: (DL) Specify the target ratio of communication overhead to computation. This option is only enabled for multi-node operation and if train_samples_per_iteration equals -2 (auto-tuning).

rho: (DL) Specify the adaptive learning rate time decay factor. This option is only applicable if adaptive_rate is enabled.

epsilon: (DL) Specify the adaptive learning rate time smoothing factor to avoid dividing by zero. This option is only applicable if adaptive_rate is enabled.

max_w2: (DL) Specify the constraint for the squared sum of the incoming weights per unit (e.g., for Rectifier).

initial_weight_distribution: (DL) Select the initial weight distribution (Uniform Adaptive, Uniform, or Normal). If Uniform Adaptive is used, the initial_weight_scale parameter is not applicable.

initial_weight_scale: (DL) Specify the initial weight scale of the distribution function for Uniform or Normal distributions. For Uniform, the values are drawn uniformly from initial weight scale. For Normal, the values are drawn from a Normal distribution with the standard deviation of the initial weight scale. If Uniform Adaptive is selected as the initial_weight_distribution, the initial_weight_scale parameter is not applicable.

classification_stop: (DL) (Applicable to discrete/categorical datasets only) Specify the stopping criterion for classification error fractions on training data. To disable this option, enter -1.

max_hit_ratio_k: (DL, GLM) (Classification only) Specify the maximum number (top K) of predictions to use for hit ratio computation (for multinomial only). To disable this option, enter 0.

regression_stop: (DL) (Applicable to real value/continuous datasets only) Specify the stopping criterion for regression error (MSE) on the training data. To disable this option, enter -1.

diagnostics: (DL) Check this checkbox to compute the variable importances for input features (using the Gedeon method). For large networks, selecting this option can reduce speed. This option is selected by default.

fast_mode: (DL) Check this checkbox to enable fast mode, a minor approximation in back-propagation. This option is selected by default.

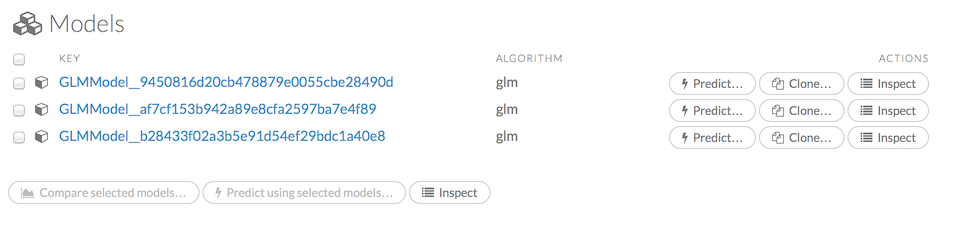

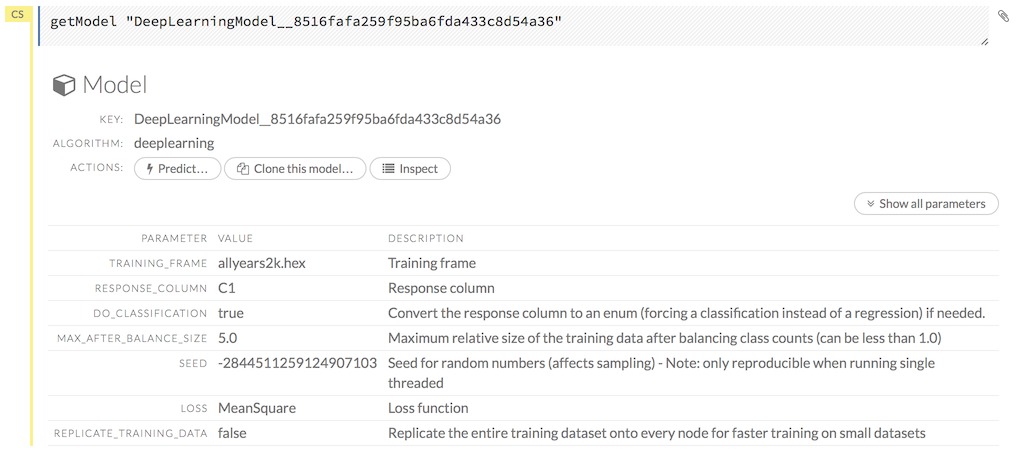

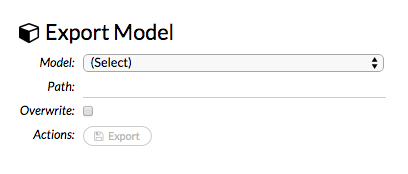

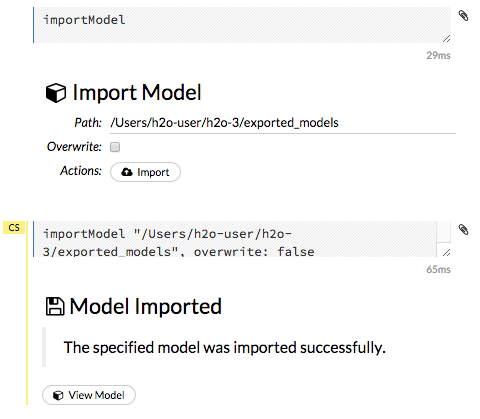

force_load_balance: (DL) Check this checkbox to force extra load balancing to increase training speed for small datasets and use all cores. This option is selected by default.